I do not test my production code exhaustively. First, it is hard to test user layer code. Second, I am not sure that the application will stay unchanged. Tests add significant drag to development, so I want to use them wisely.

Instead I rely on a paranoid coding style with real-time error reporting. This has proved to be a much better approach for our team. The software seems to work as expected, and if there are any real-world cases when it does not - we find out about them very quickly.

Our production code looks very different from what you might expect - there are lots of assertions, especially at any boundary between components, modules, or client and server. The farther apart two pieces of code that use each other are, the more assertions we use: the defensive distance principle.

    lazyAss(check.unemptyString(url), 'missing url', url);

lazyAss is my lazy assertion library, which allows passing as many arguments as needed without paying the performance penalty. check comes from the check-types.js library of convenient predicates. AngularJS, lodash and check-types all come with lots of built-in predicates you can use; see the defensive examples blog post.
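To illustrate why a lazy assertion can accept many arguments cheaply, here is a minimal sketch of the idea. It is not the actual lazyAss source: `lazyAssSketch` and `formatArg` are hypothetical names, the message format is made up, and calling function arguments on failure is an assumption of this sketch. The point is only that the message is assembled when the condition is falsy, and never on the success path.

```javascript
// Hypothetical stand-in for a lazy assertion; NOT the real lazyAss source.
function formatArg(arg) {
  // Assumption of this sketch: function arguments are called only on failure,
  // so an expensive message costs nothing when the assertion passes.
  if (typeof arg === 'function') {
    return String(arg());
  }
  return typeof arg === 'string' ? arg : JSON.stringify(arg);
}

function lazyAssSketch(condition) {
  if (condition) {
    return; // success path: the extra arguments are never inspected
  }
  var rest = Array.prototype.slice.call(arguments, 1);
  throw new Error(rest.map(formatArg).join(' '));
}

// Usage: the first call passes silently, the second fails and builds its message.
lazyAssSketch(typeof 'http://foo' === 'string', 'missing url', 'http://foo');

var caught = null;
try {
  lazyAssSketch(false, 'missing url', { url: '' });
} catch (err) {
  caught = err.message;
}
console.log(caught); // missing url {"url":""}
```

Because the serialization happens inside the failure branch, you can pass whole objects as context without slowing down the common case.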

We picked check-types.js to be our base library of assertions, but only use the predicates. It is very simple to extend check-types with new predicates to make code read as natural as possible.

    check.apiUrl = function (str) {
    ...
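The full body of check.apiUrl is not shown here. As a rough sketch only, a predicate consistent with the unit tests later in this post (which accept '//api/v0/something' and reject 'v1' and '/something/else') might look like the following. The regular expression and the local `check` object are assumptions for illustration, not the original implementation.

```javascript
// Local stand-in for the check-types object so the sketch runs on its own.
var check = {};

// Assumed rule: an API url is a string that starts with //api/v<digits>.
check.apiUrl = function (str) {
  return typeof str === 'string' && /^\/\/api\/v\d+/.test(str);
};

console.log(check.apiUrl('//api/v0/something')); // true
console.log(check.apiUrl('/something/else'));    // false
console.log(check.apiUrl());                     // false
```

Once attached to `check`, the new predicate reads like any built-in one when used inside an assertion.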

Testing code

Our testing code uses Jasmine and used to look very standard. We only include additional matchers from Jasmine-Matchers.

    describe('check.apiUrl', function () {
    ...

There are two problems with this testing code.

problem 1: If a matcher fails, we do NOT get meaningful information. Instead we get a message like expected false to be true, because any expression passed into expect() is evaluated first, producing true/false, and only then passed into the matcher. In order to pinpoint the offending assertion we are then forced to add a message to the code, often repeating the predicate source:

    describe('check.apiUrl', function () {
    ...

The unit tests become verbose and repeat the same information.

problem 2: We are using two different tools to do essentially the same task. In production, we use an assertion function with any predicate, but in our specs we use the built-in plus additional Jasmine matchers. Sometimes we catch ourselves stopping and thinking "wait, this is production, I cannot use toBeArrayOfNumbers".

Removing matchers

Finally we decided to solve both problems by not using the Jasmine matchers in our unit tests. Instead we use the same lazy assertions with predicates as in production code. This is the approach taken by the Mocha testing framework, for example: use any assertion library that throws an exception; there are no built-in assertions.

    describe('check.apiUrl', function () {
      it('exists', function () {
        lazyAss(check.fn(check.apiUrl));
      });
      it('passes valid urls', function () {
        lazyAss(check.apiUrl('//api/v0/something'));
        lazyAss(check.apiUrl('//api/v1/something/else'));
      });
      it('fails invalid', function () {
        lazyAss(!check.apiUrl());
        lazyAss(!check.apiUrl('v1'));
        lazyAss(!check.apiUrl('/something/else'));
      });
    });

We solved the first problem (which assertion failed?) by including lazy-ass-helpful BDD.js before the unit tests and replacing describe with the helpDescribe function.

    helpDescribe('check.apiUrl', function () {
      it('exists', function () {
        lazyAss(check.fn(check.apiUrl));
      });
      ...
    });

lazy-ass-helpful-bdd.js rewrites the callback function provided to helpDescribe on the fly, placing the predicate source in each lazyAss into its own message. Thus the above code will be equivalent to:

    describe('check.apiUrl', function () {
      it('exists', function () {
        lazyAss(check.fn(check.apiUrl), 'condition [check.fn(check.apiUrl)]');
      });
      ...
    });

Using this approach provides a tremendous benefit when a test fails: you get the context right away, without even looking at the spec file. For example, if one of the many assertions in negative tests fails (on purpose here)

    it('fails invalid', function () {
      lazyAss(!check.apiUrl());
      lazyAss(!check.apiUrl('//api/v1'));
      lazyAss(!check.apiUrl('/something/else'));
    });

we will get

    module check.apiUrl test fails invalid failed
    condition [!check.apiUrl('//api/v1')]

This lets you get a sense of the failure at a glance, and encourages writing more assertions inside the same test.
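The rewriting step can be sketched roughly as follows. This is not the lazy-ass-helpful source, just one way the idea could work: read the callback's source with toString(), append the predicate text as a message to every assertion, and rebuild the function. The helper names `addConditionMessages` and `rebuild`, the simplified `lazyAss` and `check` stand-ins, and the restriction to single-argument, single-line `lazyAss(<expr>);` calls are all assumptions of this sketch.

```javascript
// Hypothetical sketch of source rewriting; NOT the real lazy-ass-helpful code.
// Assumes every assertion sits on one line in the form lazyAss(<expr>);
function addConditionMessages(fn) {
  return fn.toString().replace(/lazyAss\((.+)\);/g, function (match, expr) {
    var message = JSON.stringify('condition [' + expr + ']');
    return 'lazyAss(' + expr + ', ' + message + ');';
  });
}

// Rebuild the function from its rewritten source. The rebuilt code loses its
// closure, so the names it needs are passed in explicitly.
function rebuild(fn, names, values) {
  var body = '"use strict"; return (' + addConditionMessages(fn) + ');';
  return new Function(names.join(', '), body).apply(null, values);
}

// Simplified stand-ins so the sketch runs on its own.
function lazyAss(condition, message) {
  if (!condition) {
    throw new Error(message || 'assertion failed');
  }
}
var check = {
  apiUrl: function (str) {
    return typeof str === 'string' && /^\/\/api\/v\d+/.test(str);
  }
};

var spec = function () {
  lazyAss(!check.apiUrl());
  lazyAss(!check.apiUrl('//api/v1'));
};

var helpful = rebuild(spec, ['lazyAss', 'check'], [lazyAss, check]);
var message = null;
try {
  helpful();
} catch (err) {
  message = err.message;
}
console.log(message); // condition [!check.apiUrl('//api/v1')]
```

A regular expression is enough for a demonstration, but a robust tool would more likely parse the source into a syntax tree so that multi-line assertions and assertions with existing messages are handled correctly.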

Bonus 1: variables in the predicate

lazy-ass-helpful also tries to determine the variables inside the predicate expression and adds them to the arguments automatically (in addition to the predicate source):

    it('checks url', function () {
      var url = 'something';
      lazyAss(check.apiUrl(url));
    });

This test fails and generates the following helpful message

    module check.apiUrl test checks url failed
    condition [check.apiUrl(url)] url: 'something'
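How the variables are detected is not described here, so the following is only a guess at one simple approach: scan the predicate text for identifiers that are neither property names nor objects or functions being accessed or called, then append each one as a label plus its value. `findVariables` and `addVariables` are hypothetical names, and the token scan is deliberately naive.

```javascript
// Hypothetical sketch; NOT how lazy-ass-helpful necessarily finds variables.
function findVariables(expr) {
  // Blank out quoted string literals so their contents are not taken for names.
  var stripped = expr.replace(/'[^']*'|"[^"]*"/g, '""');
  var skip = ['true', 'false', 'null', 'undefined', 'typeof', 'new', 'this'];
  var names = [];
  var re = /[A-Za-z_$][\w$]*/g;
  var match;
  while ((match = re.exec(stripped)) !== null) {
    var name = match[0];
    var before = stripped.charAt(match.index - 1);
    var after = stripped.slice(match.index + name.length).trim().charAt(0);
    var isPropertyOrFragment = /[\w$.]/.test(before); // e.g. apiUrl in check.apiUrl
    var isObjectOrCall = after === '.' || after === '('; // e.g. check. or fn(
    if (!isPropertyOrFragment && !isObjectOrCall &&
        skip.indexOf(name) === -1 && names.indexOf(name) === -1) {
      names.push(name);
    }
  }
  return names;
}

// Build the rewritten assertion text: predicate, its source, then name/value pairs.
function addVariables(expr) {
  var extras = findVariables(expr).map(function (name) {
    return JSON.stringify(name + ':') + ', ' + name;
  });
  var parts = ['lazyAss(' + expr, JSON.stringify('condition [' + expr + ']')];
  return parts.concat(extras).join(', ') + ');';
}

console.log(addVariables('check.apiUrl(url)'));
// lazyAss(check.apiUrl(url), "condition [check.apiUrl(url)]", "url:", url);
```

Since the variable itself (not its text) is appended as an argument, its value is only serialized if the assertion actually fails.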

Bonus 2: API documentation examples

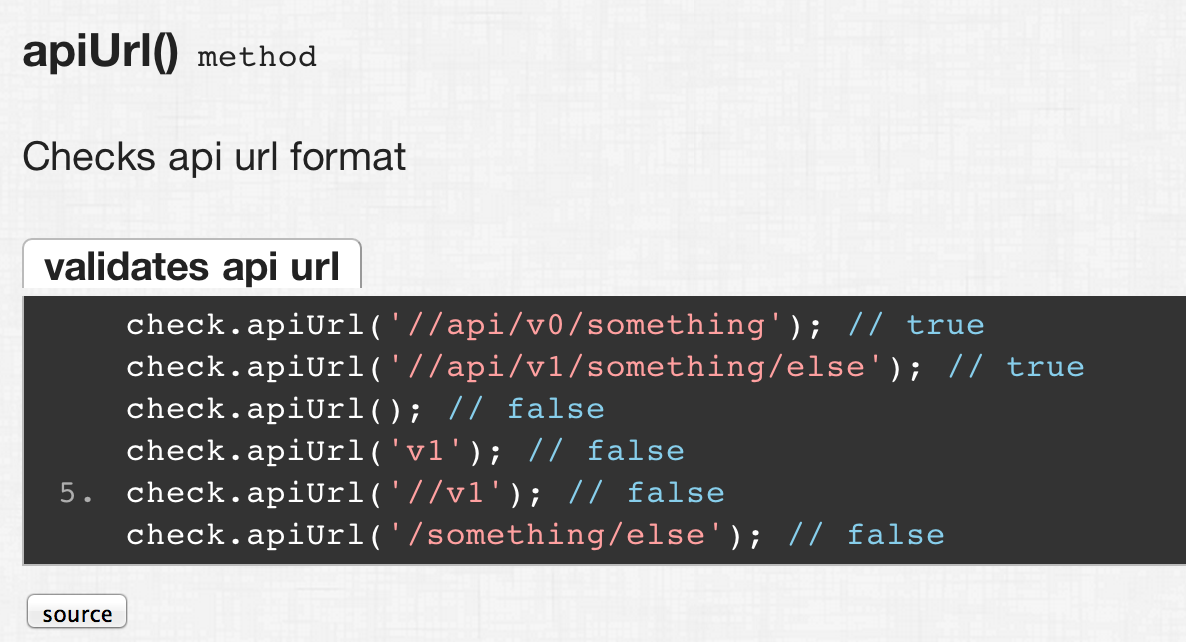

We generate JavaScript API docs using xplain. Instead of manually describing methods and examples, we just mark unit tests as samples for a function. xplain transforms the unit test source into a human-readable format and adds it as an example to the generated docs.

    /**
    ...

and its unit tests

    helpDescribe('check.apiUrl', function () {
    ...

We generate HTML docs using the command xplain -i checks.js -i checks-spec.js which produces the following doc.

Conclusion

We consolidated production and unit test code, picking assertions over matchers. Using additional tools we avoid repeating ourselves, but still get all the information necessary to debug a failed unit test. We also avoid tedious API documentation work by using the unit tests themselves as code examples.