Traditional code coverage tools like istanbul / nyc are useful to figure out if any code paths were NOT exercised by the unit tests. For example, they can show us if error handling was tested or not. In my previous blog posts I have shown how to use code coverage to perform many interesting things. Check out

- Live code coverage stream

- Not tested - not included!

- High MPG code coverage

- Code coverage proxy

- Code coverage by commit

- Accurate coverage number

Yet, code coverage is misleading in some situations. For example, imagine a function that checks if a given string is a valid email. We can quickly write simple code that appears to work

1 | function isEmail(s) { |

We can throw a unit test that achieves 100% code coverage!

1 | it('passes gmail emails', () => { |

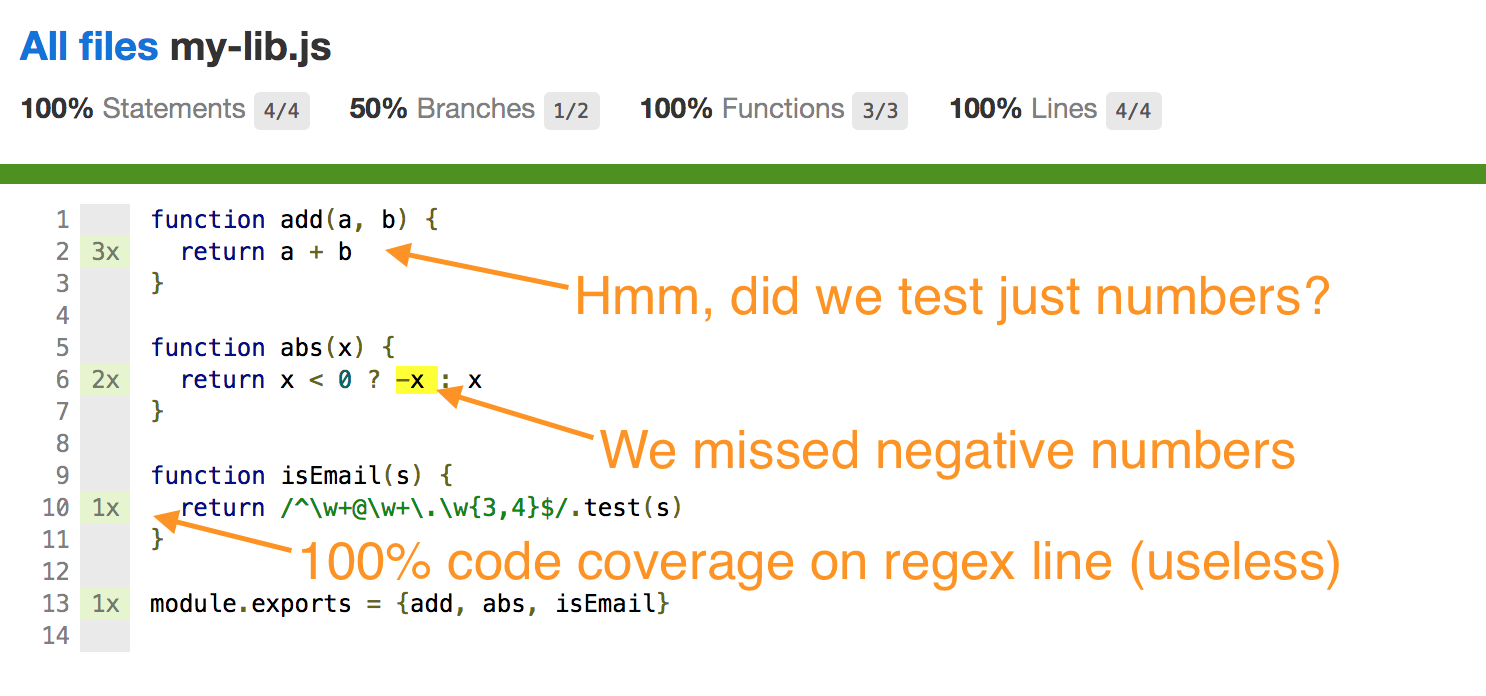

Here is the code coverage report for a few functions collected by the nyc.

We can see for example that we have tested addition line a + b, but we have no idea if

we have tested this line with just numbers or with strings (concatenation case). We can

see that we missed the code branch in the abs test. We can also see 100% code coverage

inside isEmail function.

Yet, the complete code coverage tells us nothing. Anyone who wants to understand if the code

handles 2 letter TLD domains (like [email protected]) has to hunt through all unit tests to see

if such email has been tested.

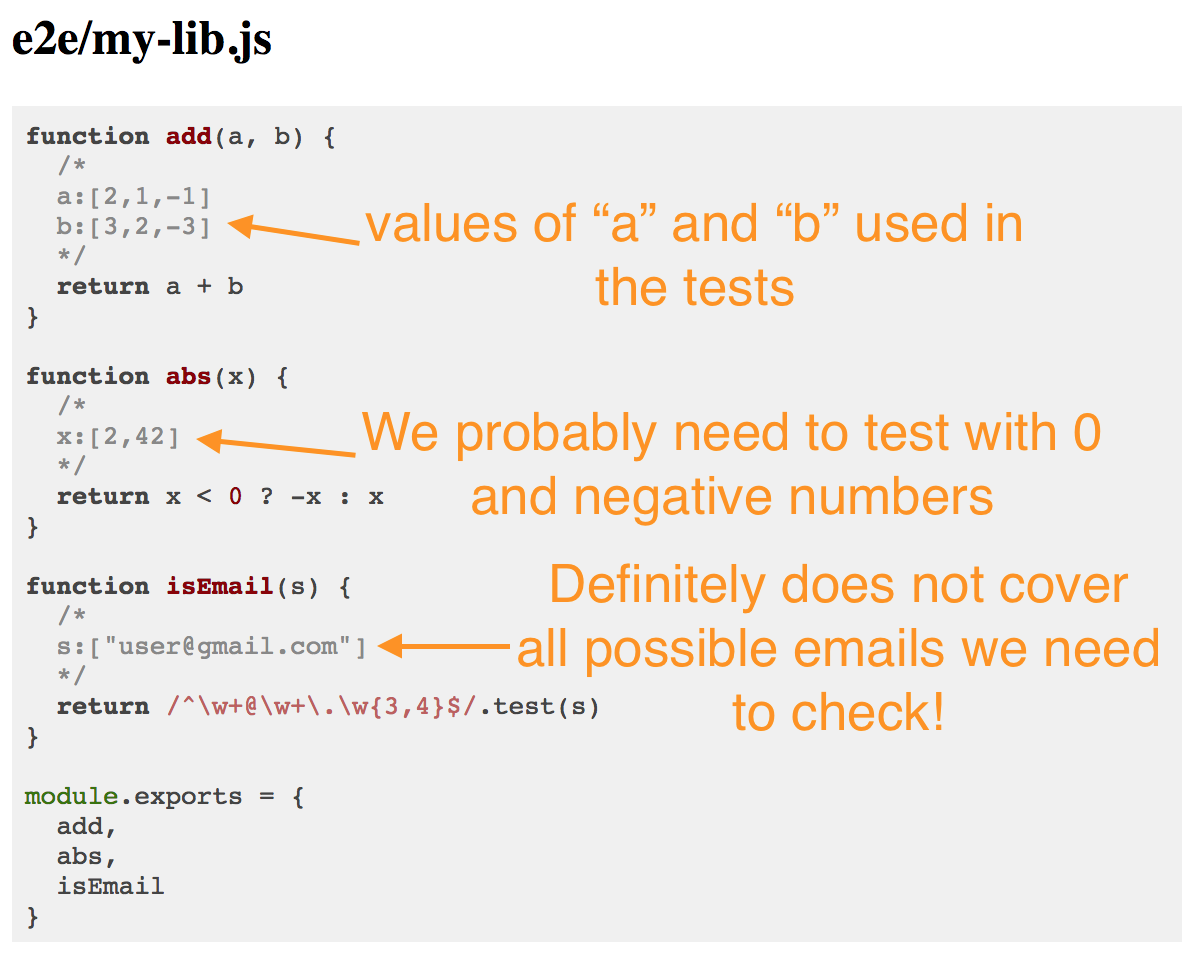

This is why I wrote data-cover. It is a module you preload when running your tests that instruments the source code and reports after tests finish for each function all data inputs it has received during unit tests. Here is the report it generates from the same tests.

For now, the collected argument values are just placed into the code as comments. I am thinking how to better summarize and report the missing data classes that should be tested.

Prediction

The data-cover is still in its inception, but I see

@ArtemGovorov of

Wallaby.js and

Quokka.js

fame grabbing this idea and running with it 😀