The Cypress test runner includes Chai, Chai-jQuery, and Sinon-Chai assertions. You can also create custom assertions for test readability quite easily. In this blog post I want to show a different type of assertion that could be useful in your end-to-end tests: soft assertions.

A typical assertion fails the test if the condition is not satisfied:

    expect(10).to.equal(20)

Cypress can retry certain query commands if the attached assertions fail. The retry timeout is configured globally, or per test, or per command.

    cy.get('#todo li')

But sometimes we want to check, say, the number of elements without failing the test if the condition is false. For example, we might need to delete all existing todos before starting the test:

    cy.get('#todo li .destroy')

This approach only works if there are items. If there are no items, there is nothing to click and the test fails. The user sees a "cannot find elements to click" error, but the real problem is "we have no todo items". The test assumes there are todo items, yet somehow the list is empty. I would like to warn the user that this assumption is wrong. This is where soft assertions come in handy. They could look something like this:

    cy.get('#todo li .destroy')

Let's see how the soft assertions could help us understand the behavior of the application during the test better.

Example app

Our example application is TodoMVC from bahmutov/test-api-example.

    beforeEach(() => {

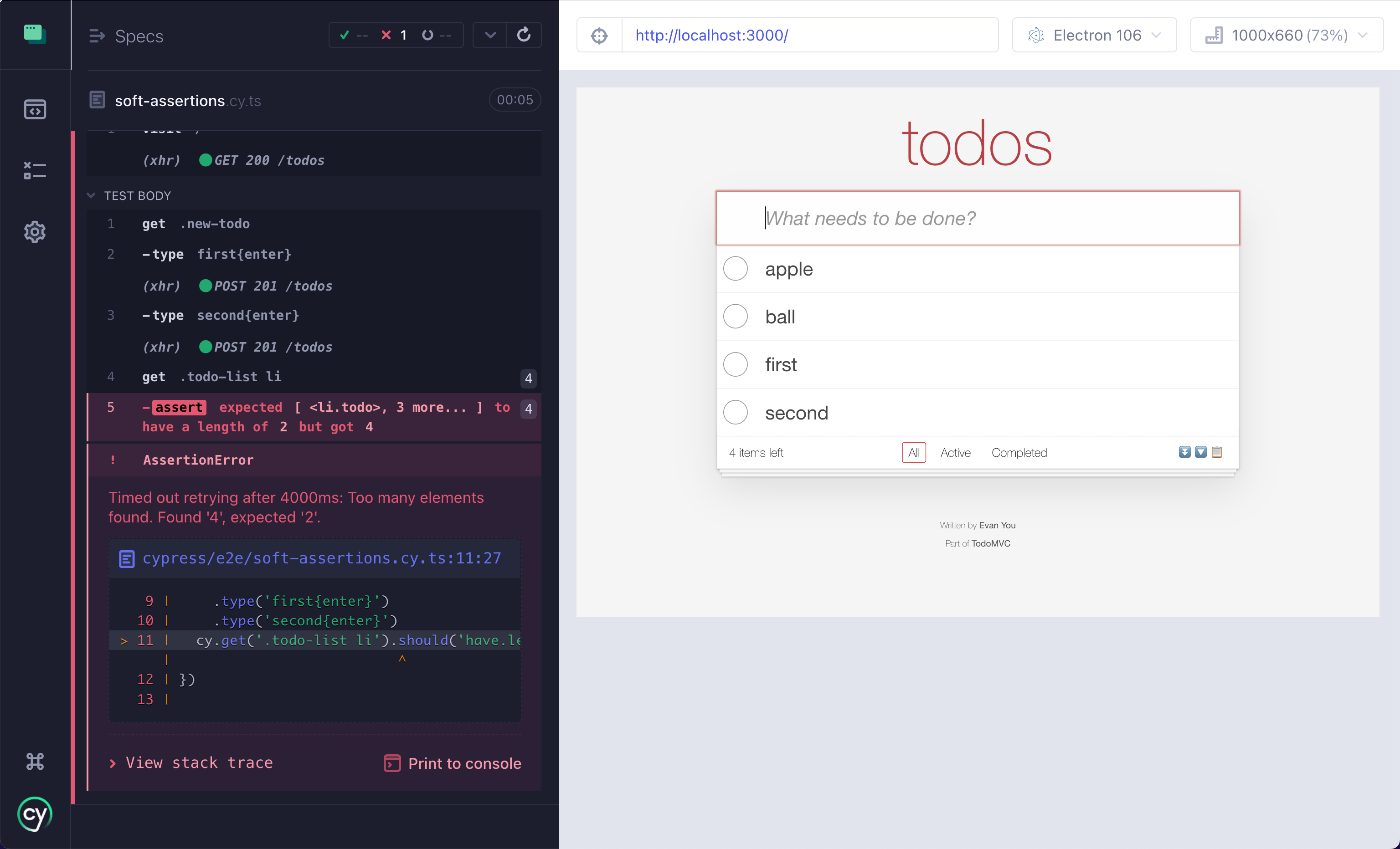

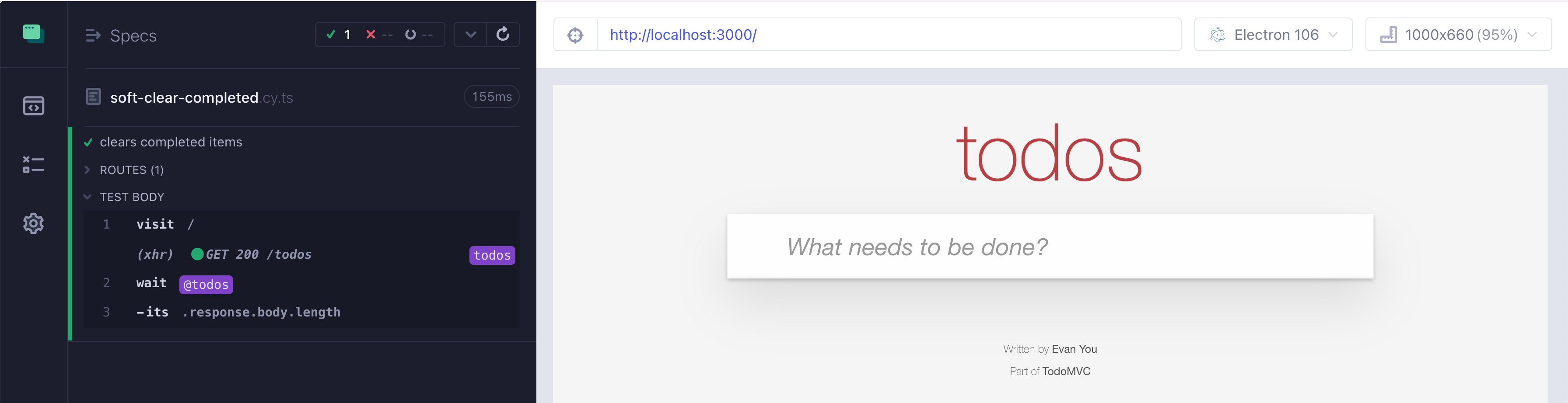

My first test run fails because a couple of todos were left over from a previous run.

Soft assertions

Now let's see how the failed test could be understood better if we had additional soft assertions. Install the cypress-soft-assertions plugin:

    $ npm i -D cypress-soft-assertions

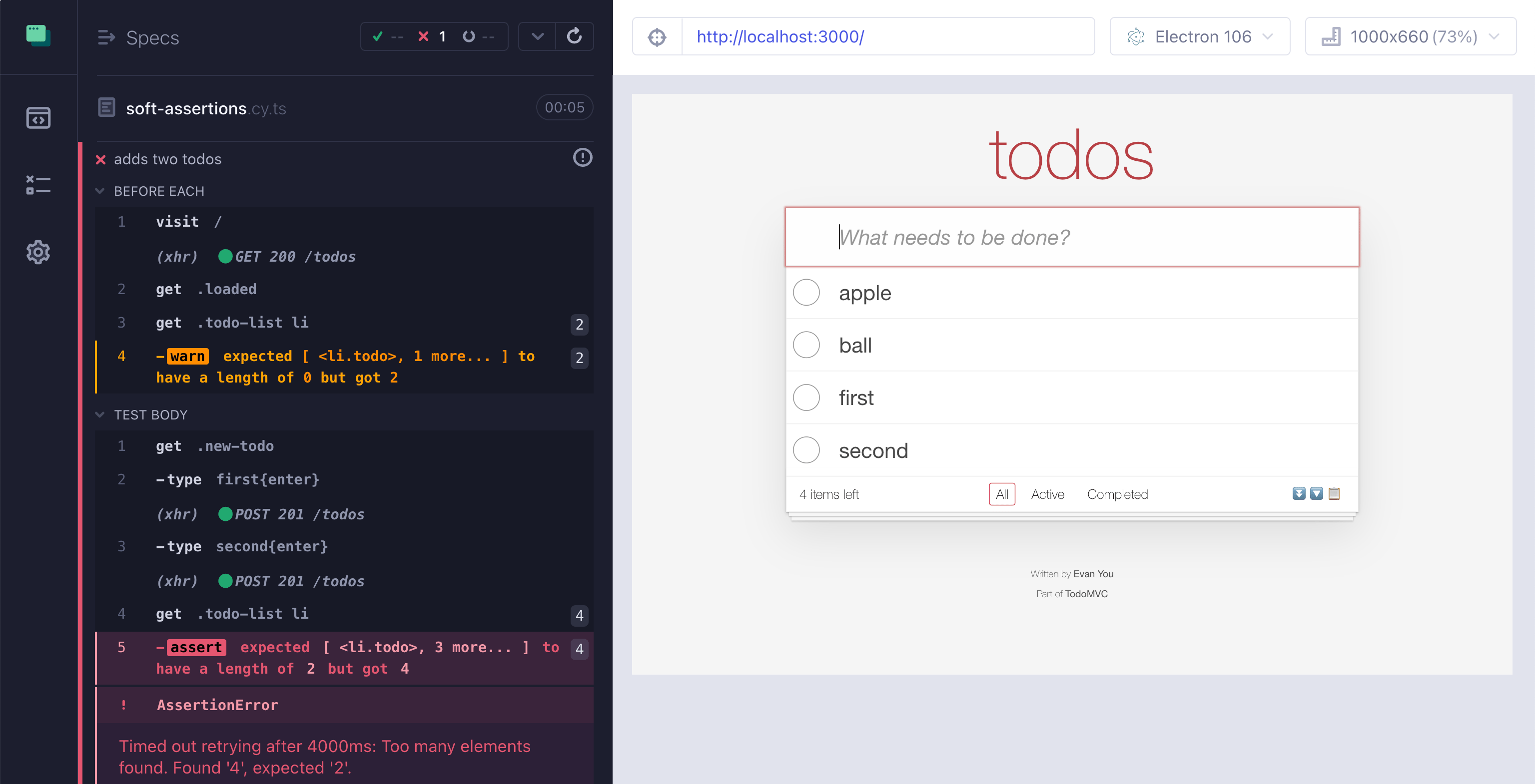

Then add a soft assertion cy.get('.todo-list li').better('have.length', 0) to check the number of items shown after the page loads. Soft assertions work exactly like .should('assertion chainers', ...any arguments), with .should replaced by .better:

    import 'cypress-soft-assertions'

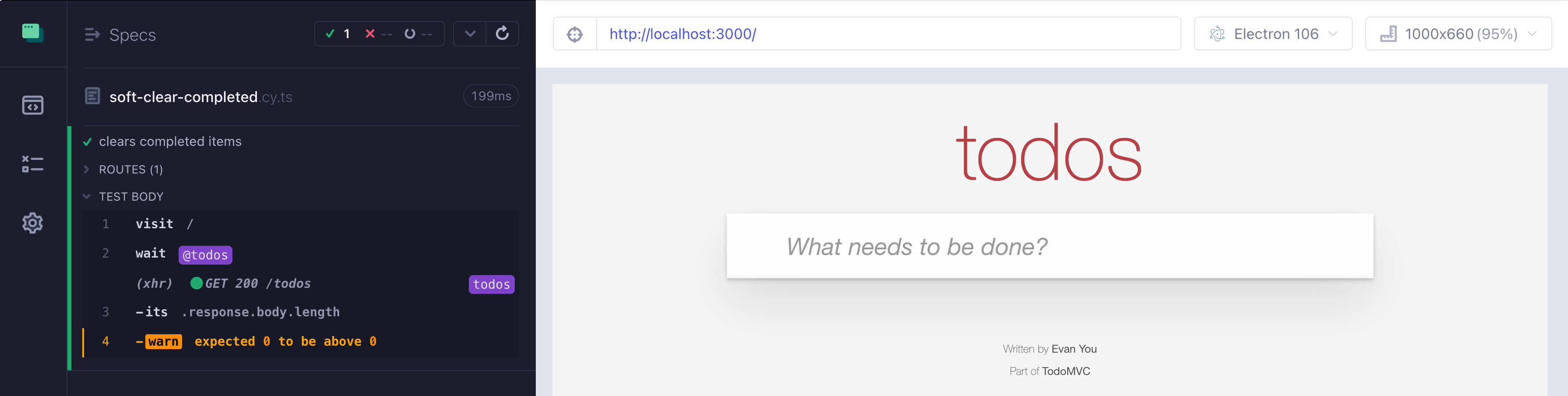

The test still fails, but we now see a warning much earlier. Notice the warn command: it looks like an assertion, but when it fails, it does not fail the test.

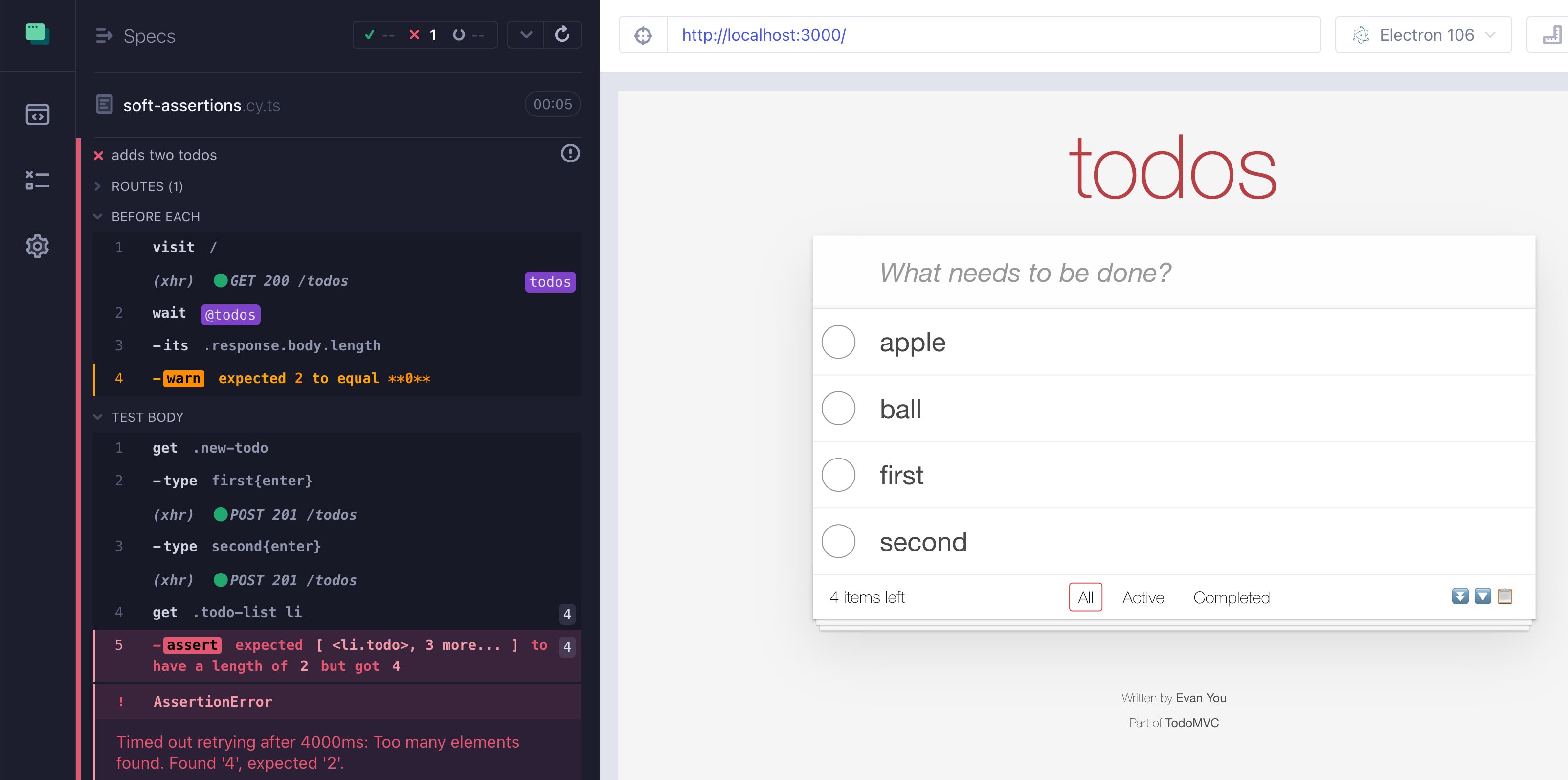

We can move the soft assertion even earlier by spying on the Ajax network call the application makes to load the data. The returned list better have length zero!

    import 'cypress-soft-assertions'

When the test fails, the warning lets us understand why the page is showing the wrong number of items.
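If you would rather not depend on the plugin, a similar early warning could be approximated with standard Cypress commands and a small helper. The sketch below is an assumption on my part: `lengthWarning` is a hypothetical name, and the `GET /todos` route in the commented usage is a guess at how the app loads its data, so adjust it to the real endpoint.

```javascript
// Hypothetical helper: returns a warning message when a list does not
// have the expected length, or null when the expectation holds.
function lengthWarning(list, expected) {
  if (!Array.isArray(list)) {
    return `expected an array, got ${typeof list}`
  }
  return list.length === expected
    ? null
    : `expected ${expected} items, got ${list.length}`
}

// Possible use inside a Cypress test, assuming the app loads its data
// with GET /todos (an assumption, not taken from the example app):
//
//   cy.intercept('GET', '/todos').as('todos')
//   cy.visit('/')
//   cy.wait('@todos').its('response.body').then((list) => {
//     const message = lengthWarning(list, 0)
//     if (message) cy.log(`warning: ${message}`)
//   })
```

The helper never throws, so the test keeps running and the message only shows up in the Command Log.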

Warn about unexpected data

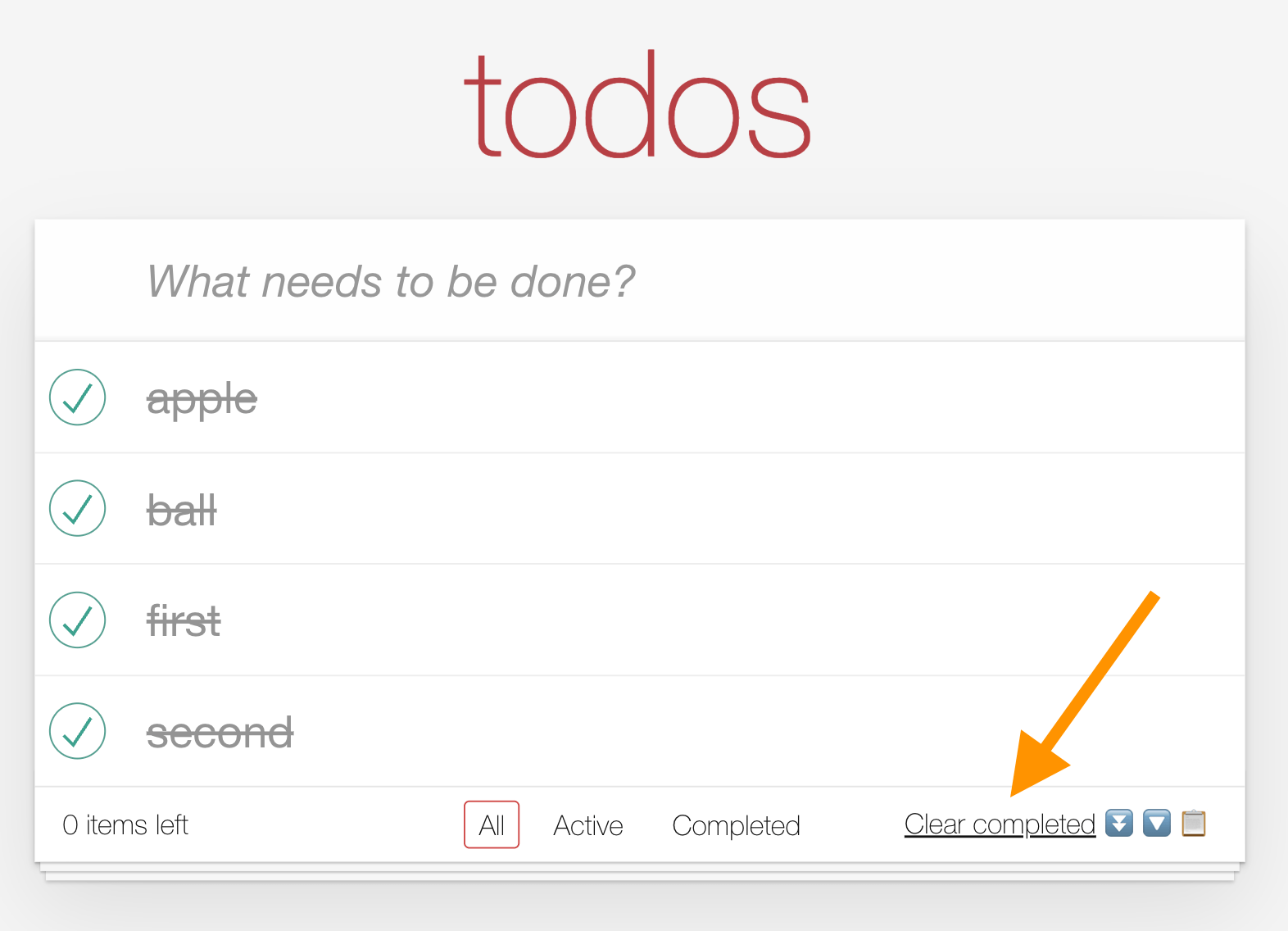

The previous example warned us when the server had unexpected state left by the previous tests. We can also use soft assertions to warn about edge data cases that prevent the test from exercising what it is meant to test. For example, let's write a test that removes all items using the "Clear completed" button.

We are lazy, so we do not create any Todo items to clear, instead relying on the server to have some existing items. We are still cautious and handle the case with no items on the server.

    it('clears completed items', () => {

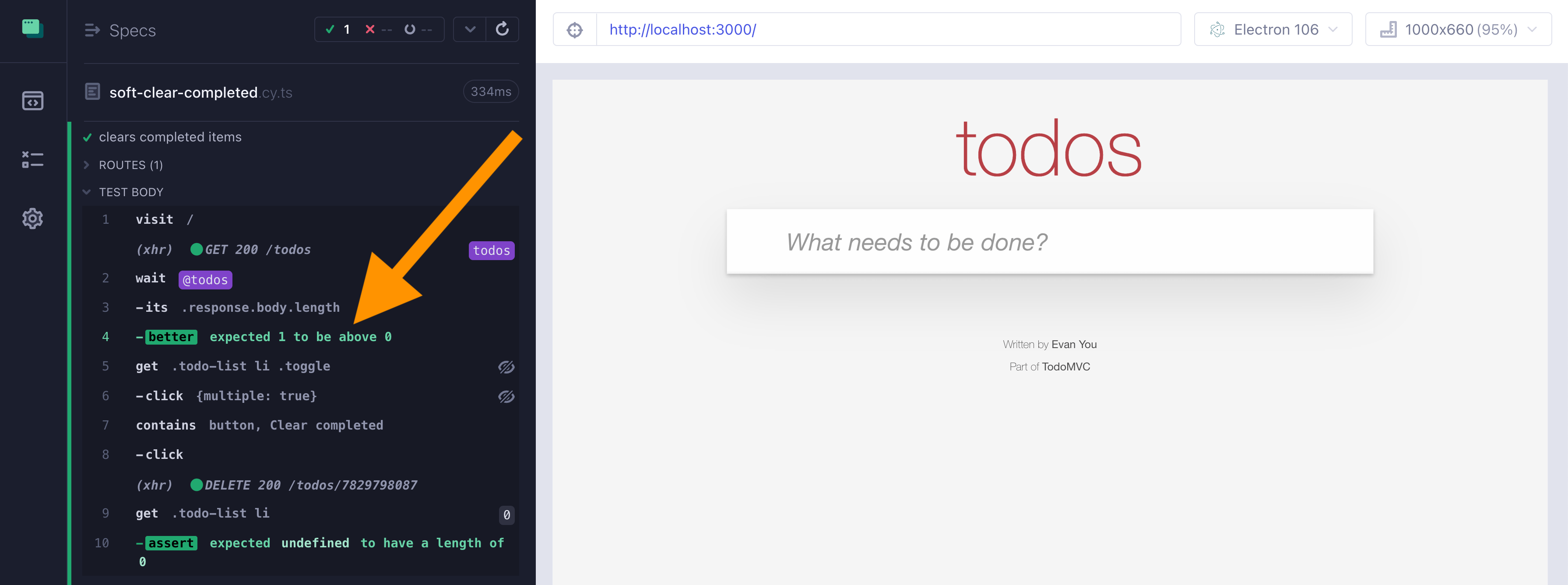

The test runs when we have some existing items.

But if we re-run the test from now on, it will still pass, yet it will not test the Clear completed button!

Should the test fail if there are no items? Or simply warn us that it is NOT doing its part? Using soft assertions we can have a warning.

    import 'cypress-soft-assertions'

If we create even a single todo, the test is all green, no warnings.

Cypress Cloud

I think warnings about the data are going to be 100x more important if the historical data from Cypress Cloud could be used. Imagine the test warning you that all previously recorded test runs on CI had 2 test items, while suddenly the application starts with 100. The test might still pass, yet such data and behavior warnings sprinkled through the test can give you the historical context about when the behavior changed. The failed test investigation could proceed from "Hmm, why is this button missing?" to "Hmm, the button is missing, ohh, the server returned a string, but it used to return an object, ohh, we now return a stringified object for some reason..."
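To make the idea concrete, here is a purely illustrative sketch of such a history-based check. It assumes the item counts from earlier runs are somehow available as a plain array; I am not implying that any such Cypress Cloud API exists, and `historyWarning` is a hypothetical name.

```javascript
// Hypothetical helper: compares the current item count against counts
// seen in earlier runs and returns a warning string, or null.
function historyWarning(current, previousCounts) {
  if (previousCounts.length === 0) return null // no history to compare with
  const min = Math.min(...previousCounts)
  const max = Math.max(...previousCounts)
  if (current >= min && current <= max) return null
  return `item count ${current} is outside the historical range ${min}..${max}`
}

// All previous runs had 2 items, and suddenly the app starts with 100.
const warning = historyWarning(100, [2, 2, 2])
```

A range check is the simplest possible rule; a real implementation would probably need something more tolerant of normal variation between runs.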

Warning

The current cypress-soft-assertions plugin is very very very much just a proof of concept. Please do not use it. In fact, I would only use soft assertions if they were a part of the core Test Runner API.