I have never had to manage or really use Redis in my professional life. Other people have set it up for me, for session storage or other needs. But recently I needed a quick cache for checking external urls, so I decided to finally use Redis for real.

The idea behind the Redis NoSQL database is simple: given a key and a value (almost any serializable value works), write the value into the database. You can even set an expiration duration on the key; after that time the value is automatically deleted from the Redis database.

```
// Redis set command
SET key value [EX seconds]
```

Using the popular Node Redis client ioredis, this looks like this:

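```js
const url = process.env.REDIS_URL
const Redis = require('ioredis')
const redis = new Redis(url)
// store "bar" under the key "foo" and expire it after 5 seconds
redis.set('foo', 'bar', 'ex', 5)
```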

Great, now let's set up a shared Redis instance; I don't want this to work only on my machine!

Setting up remote Redis

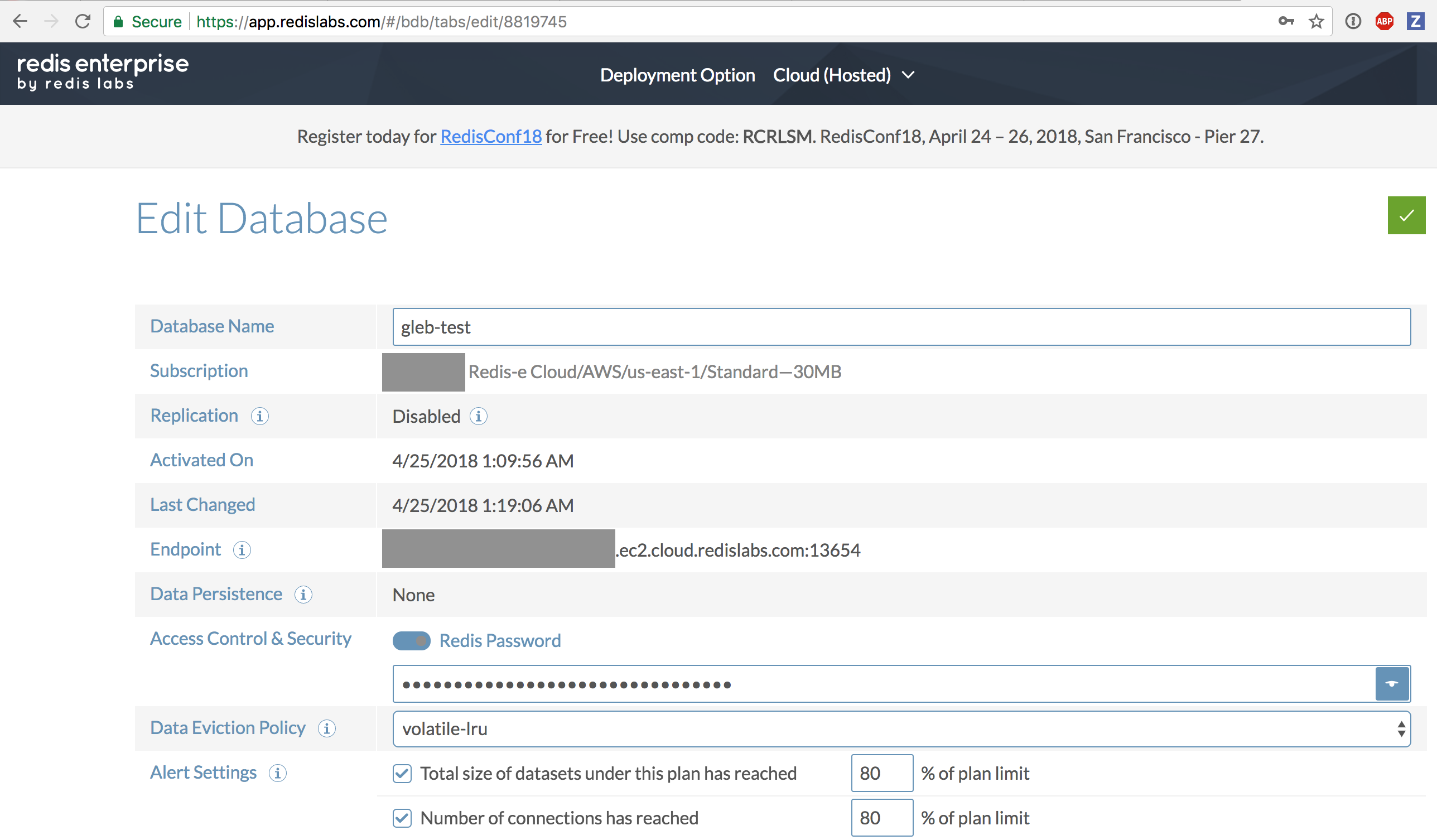

I set up a free Redis instance at https://redislabs.com. A total of 30MB should be plenty for my needs. To connect, I need the url with the password included.

I place the full connection url into the ~/.as-a/.as-a.ini file like this:

```ini
[redis-labs]
REDIS_URL=redis://:<password>@<host>:<port>
```
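The connection url packs the host, port, and password into a single string. As a rough sketch (the url below is made up, and the redis://:password@host:port shape is an assumption about what the provider hands out), Node's built-in URL class can pull the pieces apart, which is handy for sanity-checking the value without printing the secret. The describeRedisUrl helper is hypothetical.

```javascript
// Hypothetical helper: split a connection url shaped like
// "redis://:password@host:port" into its parts.
function describeRedisUrl (connectionUrl) {
  const parsed = new URL(connectionUrl)
  return {
    host: parsed.hostname,
    // Redis listens on 6379 unless the url says otherwise
    port: Number(parsed.port || 6379),
    // the password lives in the userinfo part, before the "@"
    password: decodeURIComponent(parsed.password),
    hasPassword: parsed.password !== ''
  }
}

// made-up url for illustration only
const info = describeRedisUrl('redis://:s3cret@redis-12345.example.com:12345')
// report whether a password is present instead of printing it
console.log(info.host, info.port, info.hasPassword)
```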

Using the CLI tool as-a, I can quickly run my script with the REDIS_URL environment variable (or any other collection of variables) set:

```shell
$ as-a redis-labs node .
```

Here is my first script, which stores a value in a remote Redis server and reads it back:

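```js
const url = process.env.REDIS_URL
const Redis = require('ioredis')
const redis = new Redis(url)

// comment out the next line to read a previously stored value
redis.set('foo', 'bar', 'ex', 5)
redis.get('foo')
  .then(value => console.log('foo is', value)) // "bar", or null once expired
  .then(() => redis.quit())
```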

The value under the key foo is stored for only 5 seconds. Comment out the line redis.set('foo', 'bar', 'ex', 5) and quickly run the program again: the string "bar" is returned. But if we run the program more than 5 seconds later, we get null instead. Here is a "normal" run, then a run with the line commented out 4 seconds later, then another run after 4 more seconds.

```shell
$ as-a redis-labs node .
```

The value has expired.
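To make the expiration behavior concrete without a live server, here is a simplified in-memory model. It is a hypothetical illustration, not Redis internals: each entry remembers when it expires, and a read past that moment behaves as if the key never existed, returning null like the Redis client does. A fake clock replays the three runs above instead of waiting.

```javascript
// Toy store mimicking "set key value EX seconds" followed by "get key".
class ExpiringStore {
  // `now` is injectable so tests can control the clock
  constructor (now = () => Date.now()) {
    this.now = now
    this.entries = new Map()
  }

  set (key, value, seconds) {
    // no duration means the value never expires
    const expiresAt = seconds === undefined ? Infinity : this.now() + seconds * 1000
    this.entries.set(key, { value, expiresAt })
  }

  get (key) {
    const entry = this.entries.get(key)
    if (!entry) return null
    if (this.now() >= entry.expiresAt) {
      // drop the expired entry on read, then act as if it never existed
      this.entries.delete(key)
      return null
    }
    return entry.value
  }
}

// replay the runs with a fake clock measured in milliseconds
let fakeTime = 0
const store = new ExpiringStore(() => fakeTime)
store.set('foo', 'bar', 5)
const firstRun = store.get('foo') // "bar"
fakeTime += 4000
const secondRun = store.get('foo') // still "bar" after 4 seconds
fakeTime += 4000
const thirdRun = store.get('foo') // null after 8 seconds
```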

Redis vs memory

To simplify testing, instead of always going through the real Redis instance, I switched to keyv, which lets me use either an in-memory store or Redis.

```shell
npm install --save keyv @keyv/redis
```

The Keyv API for set and get is almost the same as that of the "classic" Redis client, and it is enough for my needs.

```js
const Keyv = require('keyv')
// ...
```

Run this and see the value expire after 5 seconds.

```shell
$ node .
```

Or run it against a Redis instance:

```shell
$ as-a redis-labs node .
```

Beautiful, but note that keyv returns undefined rather than null for a missing or expired key. This might be significant for some use cases, but not for mine.
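If the difference ever matters, one option is to normalize misses at the boundary so callers see the same value whichever client sits underneath. A minimal sketch; the withNullForMissing helper and the Map-backed stand-in for a keyv-like client are hypothetical.

```javascript
// Hypothetical wrapper: report a miss as null whether the client returns
// null (like ioredis) or undefined (like keyv).
function withNullForMissing (client) {
  return {
    // Promise.resolve also accepts clients whose get() is synchronous
    get: key => Promise.resolve(client.get(key))
      .then(value => (value === undefined ? null : value)),
    set: (...args) => client.set(...args)
  }
}

// stand-in for a keyv-like client, backed by a Map
const map = new Map([['foo', 'bar']])
const keyvLike = {
  get: async key => map.get(key),
  set: async (key, value) => { map.set(key, value) }
}
const cache = withNullForMissing(keyvLike)
```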

Do not prevent Node from exiting

By default, an open Redis connection prevents the Node process from exiting, just like listening on a port keeps the process running. The Redis client exposes a client.unref() method for this. I forked @keyv/redis and modified its code to expose the actual client through the Keyv constructor. Now the following program simply exits when it is done.

```js
const Keyv = require('keyv')
// ...
```

While pull request 16 stays open (or if it is declined), you can install my fork directly from GitHub:

```shell
npm i -S bahmutov/keyv-redis#5850d5999ca897ba832c751c0574d77c7b566034
```

Running the above test program confirms that the process exits normally:

```shell
$ as-a redis-labs node .
```
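The same keep-alive rule applies to every handle in Node, not only sockets. This sketch illustrates it with timers rather than a Redis connection: it runs two tiny scripts in child processes. The one with a plain setInterval never finishes on its own and hits the timeout, while the one calling unref() exits right away. net.Socket exposes the same unref() method. The exitsOnItsOwn helper is hypothetical.

```javascript
const { spawnSync } = require('child_process')

// Run a snippet in a fresh Node process and report whether it finished
// by itself before the timeout.
function exitsOnItsOwn (script, timeoutMs = 3000) {
  const result = spawnSync(process.execPath, ['-e', script], { timeout: timeoutMs })
  // on timeout the child is killed and result.error is set
  return !result.error && result.status === 0
}

// a referenced repeating timer keeps the event loop alive forever...
const intervalExits = exitsOnItsOwn('setInterval(() => {}, 1000)')
// ...but an unref'ed one no longer holds the process open
const unrefIntervalExits = exitsOnItsOwn('setInterval(() => {}, 1000).unref()')
console.log({ intervalExits, unrefIntervalExits })
```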

Top level async / await

You may have noticed that I am using the await keyword at the top level of my program. To make this work, I recommend top-level-await. Just load this module from index.js and move the "actual" source code into app.js.

```js
// index.js
require('top-level-await')
require('./app')
```

```js
// app.js
const Keyv = require('keyv')
// ...
```
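If you prefer not to hook require, a common alternative (a different technique from the top-level-await module) is to wrap the program in an async function and call it once. Node 14.8 and later also support top-level await natively inside ES modules. In this sketch, main is a hypothetical name, and the awaited value is a placeholder for something like a cache lookup.

```javascript
// Wrap the program in an async function instead of awaiting at the top level.
async function main () {
  // placeholder for real work such as `await keyv.get('foo')`
  const value = await Promise.resolve('bar')
  console.log('foo =', value)
  return value
}

// Report failures and exit with a non-zero code instead of leaving an
// unhandled rejection behind.
const finished = main().catch(err => {
  console.error(err)
  process.exitCode = 1
})
```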

Using Redis for caching

So now it is time to actually use Redis for a task. The Cypress documentation is an open source project that lives at github.com/cypress-io/cypress-documentation. The documentation uses the Hexo static site generator to transform Markdown into a static site. We have extended Hexo with a few additional helpers. One of them transforms urls into anchor links. Here are a couple of examples, including links to the Cypress redirection service on.cypress.io.

```
// link to an external page
// ...
```

When generating the static documentation site, we want to make sure the links are still valid. The url helper does the check:

- if the url has no hash part, then we check that the request HEAD <url> responds with status 200
- if the url does have a hash part, like configuration#Screenshots, then we need to get the full page and check if there is an element with ID screenshots
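The two rules can be sketched as a small decision function. This is a hypothetical illustration, not the actual url helper from the docs: it assumes absolute urls, lowercases the hash to match the #Screenshots / screenshots example, and uses a naive string search where a real check would parse the HTML.

```javascript
// Decide how to validate a url: HEAD is enough without a hash,
// otherwise fetch the page and look for the element id.
function planCheck (link) {
  const { hash } = new URL(link)
  if (!hash) {
    return { method: 'HEAD', id: null }
  }
  // "#Screenshots" -> "screenshots", following the example above
  return { method: 'GET', id: decodeURIComponent(hash.slice(1)).toLowerCase() }
}

// Naive id lookup in raw HTML; a real implementation should use an HTML parser.
function hasElementWithId (html, id) {
  return html.includes(`id="${id}"`)
}

// example urls are made up
console.log(planCheck('https://docs.example.com/configuration'))
console.log(planCheck('https://docs.example.com/configuration#Screenshots'))
```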

Because urls repeat, caching the checks speeds up the site build a lot: there are almost 1800 urls in the docs as of April 2018! Right now the cache is a plain JavaScript object.

```js
// cache validations
// ...
```
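One detail worth getting right with a plain object cache is what gets stored. This hypothetical sketch caches the Promise of the check rather than its result, so a url that appears again while its first check is still in flight reuses that request. Object.create(null) avoids accidental hits on inherited keys such as toString. The names cachedValidate and fakeValidate are illustrative only.

```javascript
// Plain object cache without a prototype, keyed by url.
const validations = Object.create(null)

function cachedValidate (url, validate) {
  if (!(url in validations)) {
    // store the Promise itself; note a rejected check stays cached too
    validations[url] = validate(url)
  }
  return validations[url]
}

// stand-in for the real HEAD / GET check, counting how often it runs
let requests = 0
const fakeValidate = url => {
  requests += 1
  return Promise.resolve(true)
}

const urls = ['https://a.example', 'https://b.example', 'https://a.example']
const checks = urls.map(url => cachedValidate(url, fakeValidate))
```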

Great, the code already uses Promises, so moving it to Keyv is straightforward. Even better, without REDIS_URL Keyv automatically falls back to an in-memory cache, which behaves the same way as the plain object.

```js
const Keyv = require('keyv')
// ...
```
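Here is a sketch of the same caching logic written against the get/set shape that Keyv exposes, where a miss resolves to undefined and the ttl is in milliseconds. The cachedCheck function and the Map-backed store are hypothetical stand-ins so the example runs without installing packages; with the real library, new Keyv(process.env.REDIS_URL) would take the store's place.

```javascript
// Look the url up in the store first; run the check only on a miss.
async function cachedCheck (store, url, check, ttlMs) {
  const cached = await store.get(url)
  if (cached !== undefined) {
    return cached
  }
  const result = await check(url)
  await store.set(url, result, ttlMs)
  return result
}

// Map-backed stand-in for an in-memory Keyv; it ignores the ttl.
function mapStore () {
  const map = new Map()
  return {
    get: async key => map.get(key),
    set: async (key, value) => { map.set(key, value); return true }
  }
}

let calls = 0
const store = mapStore()
const check = async url => {
  calls += 1
  return url.startsWith('https://')
}
// the second lookup of the same url is served from the store
const exampleRun = cachedCheck(store, 'https://a.example', check, 1000)
  .then(() => cachedCheck(store, 'https://a.example', check, 1000))
```

Unlike the Promise-caching object approach, this version does not share in-flight checks: two concurrent lookups of the same new url both run the check before either result lands in the store.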

You can see the pull request with my changes. When it gets merged, I can set the REDIS_URL environment variable on the CI that builds and deploys the site, and make the cache duration longer, like 1 day. This ensures that external links are still rechecked, yet multiple deploys per day stay fast.
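For the longer cache duration, note that the two APIs in this post count time differently: the Redis EX option, as in redis.set('foo', 'bar', 'ex', 5), takes seconds, while Keyv's ttl argument takes milliseconds. One day works out as:

```javascript
// one day for the Redis "ex" option, which counts seconds
const ONE_DAY_SECONDS = 24 * 60 * 60 // 86400
// one day for Keyv's set(key, value, ttl), which counts milliseconds
const ONE_DAY_MS = ONE_DAY_SECONDS * 1000 // 86400000
```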