I saw legoizer application at ScotlandJs 2015 in Edinburgh. The presentation by Amy Wibowo @sailorhg and Matt Baker @reissbaker showed a hackathon project they implemented: drop any image into the website to convert into a valid Lego combination of flat pieces.

You can try the conversion yourself at the original website http://sailorhg.github.io/legoizer/ using this image.

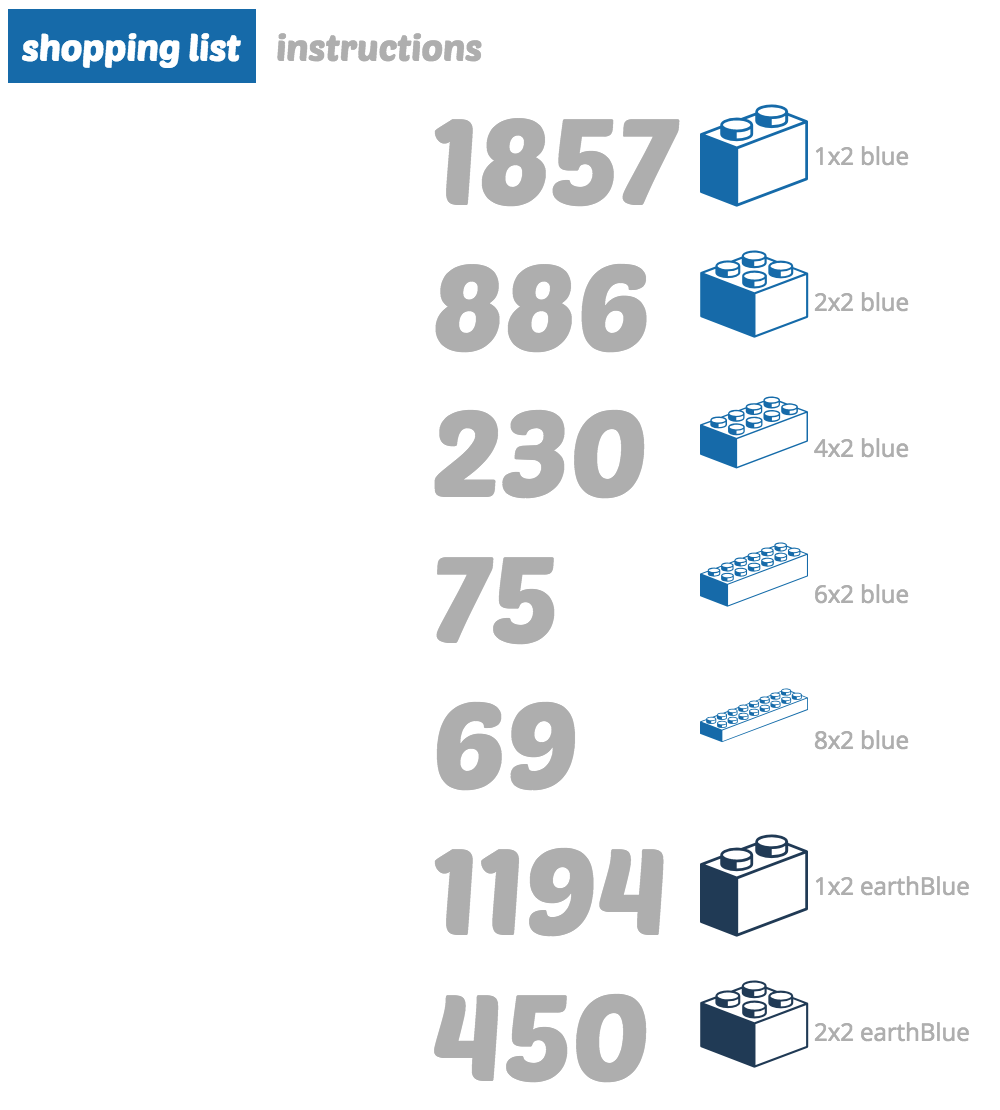

After the conversion is done, the app even gives you the list of Lego pieces to buy. Not surprisingly, the "Starry Night" will need a lot of blue pieces (this is just the start of the list)

I thought the conversion was really cool but a little bit slow. Even for a small image (the above "Starry Night" image is only 600 by 500 pixels) the website pauses for a second or two. Why can't the conversion process spit the result quickly? I decided to investigate in what I call "drive-by optimization".

The original code

The original code is not on github yet, but the unminified browserified code is available at the original website and can be used to investigate the performance issues. The code contains two main bundles: index.js and worker.js. Both were built from the same source files, thus they have common source parts. For our purpose the algorithm only has several steps.

- Send the image to a web worker

- The webworker will figure out for each pixel the closest Lego color (out of 24 widely available colors you can buy in bulk.)

- The web worker sends the converted pixels back to the main thread.

- The main thread figures out how to position blocks to cover the specific colors.

The standard 24 colors you can buy in bulk are simple:

1 | var palette = { |

In the first step of the algorithm, we need to find for each pixel from the given image the closest Lego color. The team used perceptual difference metric CIEDE2000. This metric finds a Lego color that "looks" close to any given color. While accurate, the difference formula is very computationally expensive, involving multiple trigonometric functions and square roots. For example, the following code forms the last few lines of the implementation

1 | var T = 1-0.17*cos(radians(a_hp-30))+0.24*cos(radians(2*a_hp))+ |

I believe the team took the color difference code from markusn/color-diff repo.

To me, this part looked most expensive, but I needed to prove it by profiling the code first.

Profiling code

I saved the entire legoizer to a local drive and started hacking.

First I added timestamps and profile collection to the index.js file and measured

end to end color conversion.

1 | function processImage(err, image) { |

I placed the console.time and console.timeEnd around all image processing steps, including

converting from / to the JSON format.

I used Chrome Canary 44.0.2399.0 to measure how long the the code runs in a clean environment without any additional

extensions running. The initial code took 2361ms to execute end to end. I also added timestamps to the

worker.js to measure how long the image conversion takes without the image transfer costs between the

main thread and the web worker (and back)

1 | function convert(e) { |

The timestamp showed that the function pixelMap ran for 1342ms - 50% of the total!

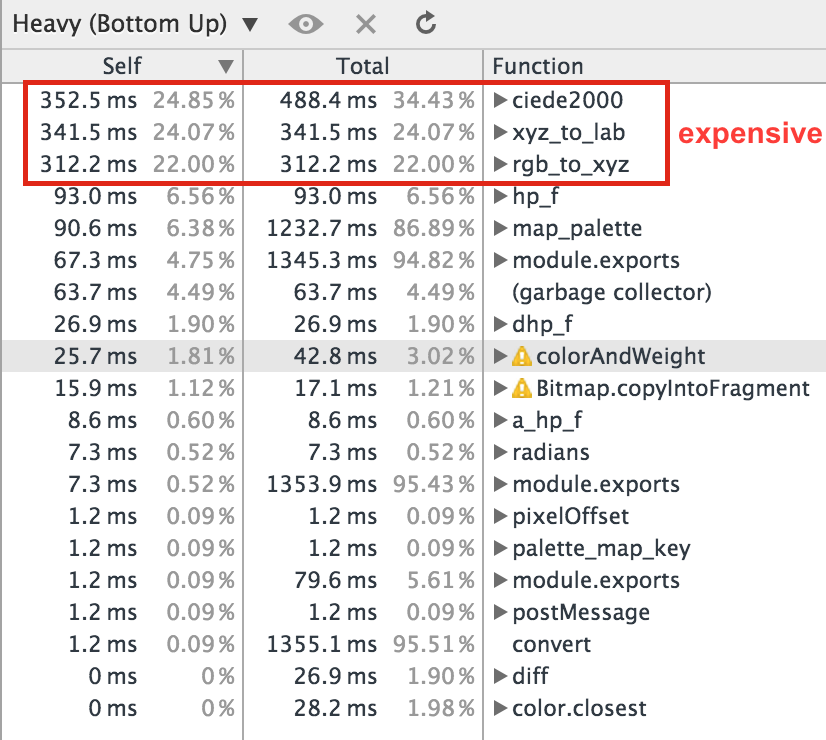

This seemed like a good candidate for further profiling. I added console.profile and console.profileEnd

next to console.time calls. The same run generated a detailed CPU profile chart. The longest running

functions are on the top of the list

The function that calls most of the longest running utilities is the following

1 | module.exports = function(bitmap, mapper, xUnit, yUnit) { |

This literally goes through each pixel, calling mapper function and saving the result.

The top 3 bottlenecks were ciede2000, xyz_to_lab and rgb_to_xyz functions, taking roughly the same amount

of time. Usually I advise to concentrate on the top bottleneck only, but in this case any one would do.

Eliminate some conversions

At some point the algorithm needed to compare two colors. Turns out the code to find the difference was really weird

1 | color.closest = function(target, relative) { |

The target was a single RGB pixel, while relative is an array of 24 RGB Lego colors.

The weird part was making an array with single color [target] and then iterating

over two arrays to find closest pairings inside color.map_palette function

1 | function map_palette(a, b, type) |

Since we always find closest color for a single RGB value, we can eliminate one iteration easily.

Also, we are interested in the closest color, thus we can remove the if (type === 'farthest') branch.

I made the code changes and ran the end to end timing again. The numbers changed to the following,

showing slight improvement

step | pixel map function (ms) | total (ms)

--------------------------------------------------------

initial | 1342 | 2361

removed inner loop | 1236 | 2280

Ok, we are only getting started!

Precompute 24 Lego Lab colors

We are using CIEDE2000 difference algorithm that operates on Lab colors. Thus every RGB triple must be converted to Lab color triple. This is an expensive operation, as you saw in the first profile. It involves first converting from RGB to a special XYZ color space and then to Lab color space.

1 | function rgb_to_lab(c) |

Any time you do powers or in this case cubic roots, there is a huge performance cost. Can we convert from RGB to Lab fewer colors? Turns out yes, and quite easily. I have noticed that we convert the 24 Lego RGB colors in every pixel comparison.

1 | function findClosest(rgb, legoColors) { |

Thus I precomputed the 24 Lego Lab colors right away and just passed them around. Only an individual RGB pixel under the investigation was converted. The performance has indeed improved

step | pixel map function (ms) | total (ms)

--------------------------------------------------------------------

initial | 1342 | 2361

removed inner loop | 1236 | 2280

precompute Lego Lab colors | 950 | 1938

Caching computed colors

We convert a lot of colors when comparing RGB pixels to Lab colors. For my next optimization I have added caching such conversions. This cache is similar to a cache that already existed in the program (storing closest picked Lego color).

1 | function rgb_to_lab(c) |

The impact was very noticeable (but a little suspicious). Unfortunately I did not have time to investigate - it seems the two caches should be pretty aligned, and only one would actually give cache hit. In any case, the color conversion cache has improved the algorithm's speed

step | pixel map function (ms) | total (ms)

--------------------------------------------------------------------

initial | 1342 | 2361

removed inner loop | 1236 | 2280

precompute Lego Lab colors | 950 | 1938

cache RGB -> Lab | 674 | 1679

Passing data to the web worker

Converting an image raster to a JSON string to pass from the main script to a web worker is an expensive operation. We also convert the result when passing the lego colors back from the web worker. One can get a sense how expensive this is by noticing that the total times are around 2x the time it takes to convert the image.

Luckily, modern browsers support Transferable objects. One thread

can pass a reference to an ArrayBuffer (or its subclass) directly. The key observation

is that this buffer is not a shared memory buffer. The caller releases the buffer

and does not have access to it anymore. This might not work for other cases, but

works very well for ours. We can get an instance of UInt8ClampedArray directly

from the canvas object holding the input image.

1 | var canvas = canvasFromImage({ |

Note that we list the transferable objects, but they could be properties in the data object to be sent

1 | // single transferable object pixelData.data.buffer |

Similarly, the web worker can send a transferable object back to avoid the expensive marshalling

1 | var bytes = new Uint8ClampedArray(output.pixels); |

The above approach eliminated huge object transfer overhead, aligning the total end to end time with the image color conversion time.

step | pixel map function (ms) | total (ms)

--------------------------------------------------------------------

initial | 1342 | 2361

remove inner loop | 1236 | 2280

precompute Lego Lab colors | 950 | 1938

cache RGB -> Lab | 674 | 1679

transferable objects | 647 | 699

Splitting work across multiple web workers

The pixels from the input image are processed independently of each other. This points to the last performance optimization: split the entire image conversion to multiple web workers executing in parallel. I chose to split the image horizontally into N regions. For example, here are color coded regions if N = 4.

The code send just a region of the original image to the web worker is very straight forward

1 | var N = 4; |

Each web worker sends the mapped pixels back and we can draw them back into the original canvas

1 | // keeps track of finished workers |

The performance gains when splitting into 4 regions were 3x (there is always some overhead when running multiple tasks in parallel)

step | pixel map function (ms) | total (ms)

--------------------------------------------------------------------

initial | 1342 | 2361

remove inner loop | 1236 | 2280

precompute Lego Lab colors | 950 | 1938

cache RGB -> Lab | 674 | 1679

transferable objects | 647 | 699

4 web workers | 225 | 250

I tried varying the number of web workers N, but the impact was minimal. Seems there is no point in splitting the work to more than the number of CPU cores (MacBook Pro with 4 cores).

Conclusion

The optimizations I used in this example are very common techniques and are not domain specific

- do not compute same information multiple times (cache results)

- use modern data passing techniques to avoid expensive data marshalling (transferable objects)

- perform independent chunks of work in separate threads (multiple web workers)

The legoizer speed up, while slower than real time 60 frames per second, is almost 10x compared to the original code. Further optimization could be achieved by scaling down the image even more (the original code scales any dropped image to the maximum 200px dimension).

In my opinion even the current speed up almost allows two interesting applications built upon the original idea

- convert a gif / movie into Lego-style movie

- real time video Lego filter for WebRTC communication

For me personally, the drive by optimizations are an excellent training ground. The lessons learned here will be applied to my day to day work.

I will make my git repo public as soon as the original code is made public (aside from the finished web app).

- Optimizing Nth is another example of a drive by optimization of 3rd party library

- my other performance blog posts