Please read Getting started with Http/2 and server push first; this example builds on it.


The problem with HTTP 1.1

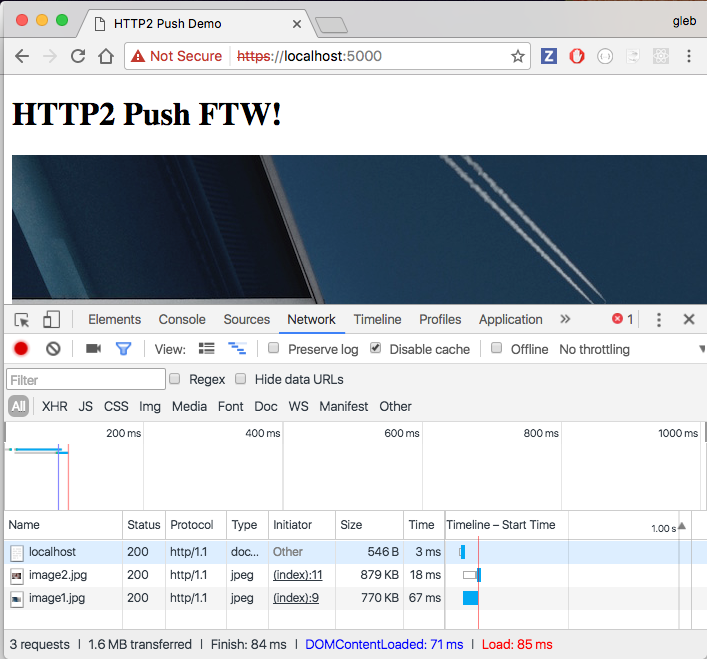

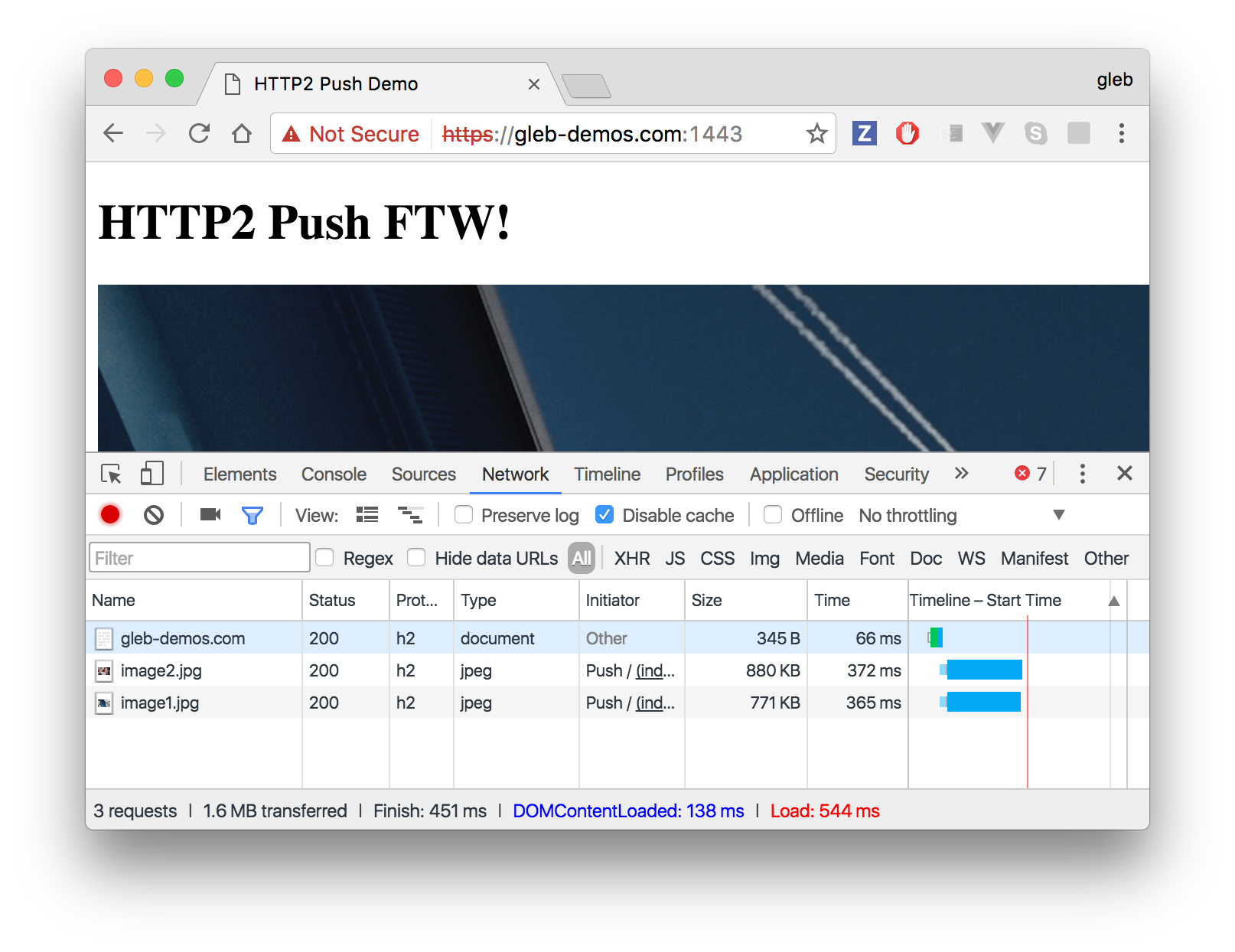

The current text-based HTTP 1.1 protocol struggles when a website serves many resources. First the HTML page is fetched, then the browser requests the other resources linked from the page. In practice each connection carries one request at a time, and browsers open only a handful of parallel connections per host, each of which costs TCP and TLS handshakes to set up. The resulting waterfall is well known to web developers: the resources stack down and to the right in the DevTools Network panel.

For example, here is a local HTTPS server (with a self-signed certificate) serving a page that includes two images from the folder public.

```javascript
const server = require('https')
```
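For reference, here is a rough, self-contained sketch of such a server using Node's built-in https module. It is not the author's code. The in-memory FILES map, the placeholder image bytes, the port and the certificate paths are all assumptions. The server is not started automatically, so the handler can be exercised on its own.

```javascript
const https = require('node:https');
const fs = require('node:fs');

// Hypothetical in-memory stand-ins for public/index.html and the two images.
// The image bodies are placeholder bytes, not real JPEG data.
const FILES = {
  '/': {
    type: 'text/html',
    body: '<img src="/images/image1.jpg"><img src="/images/image2.jpg">',
  },
  '/images/image1.jpg': { type: 'image/jpeg', body: Buffer.alloc(1024) },
  '/images/image2.jpg': { type: 'image/jpeg', body: Buffer.alloc(1024) },
};

// One resource per request: the browser only discovers the images by parsing
// the HTML, which produces the download waterfall.
function handler(req, res) {
  const file = FILES[req.url];
  if (!file) {
    res.writeHead(404, { 'content-type': 'text/plain' });
    res.end('Not found');
    return;
  }
  res.writeHead(200, { 'content-type': file.type });
  res.end(file.body);
}

// Not started automatically; pass paths to your own self-signed key and certificate.
function startServer(keyPath, certPath, port = 5000) {
  const options = { key: fs.readFileSync(keyPath), cert: fs.readFileSync(certPath) };
  return https.createServer(options, handler).listen(port);
}
```

To try it, call `startServer('server.key', 'server.crt')` with your own files and open https://localhost:5000.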

We can connect to the server at https://localhost:5000 in Chrome (I am using version 55) and observe the download times. They are not too bad, because everything is downloaded from the local machine, yet the images only start downloading after the page has finished.

Of course, browsers try to anticipate future downloads and might start fetching the images early, but ultimately the page decides what gets requested.

Can we do something different? When the server serves the index page from public/index.html, it knows that the browser will ask for the two images next. If only we could put more logic into the server and push these images to the client browser immediately, we could speed things up.

Enter HTTP/2

HTTP/2 is a major, performance-focused revision of the HTTP protocol. It uses a binary framing layer, and a single connection can multiplex many concurrent requests and responses, which can greatly improve overall browsing performance.
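To make "multiplex" concrete: every HTTP/2 frame starts with a fixed 9-byte header (RFC 7540, section 4.1). It holds a 24-bit payload length, an 8-bit type, 8-bit flags and a 31-bit stream identifier. The stream identifier lets frames for index.html and both images interleave on one connection. Server-pushed responses use even-numbered streams. Here is a minimal parser sketch; parseFrameHeader is an illustrative name, not part of any library.

```javascript
// Frame type codes from RFC 7540, section 6 (array index = type code).
const FRAME_TYPES = [
  'DATA', 'HEADERS', 'PRIORITY', 'RST_STREAM', 'SETTINGS',
  'PUSH_PROMISE', 'PING', 'GOAWAY', 'WINDOW_UPDATE', 'CONTINUATION',
];

// Decode the fixed 9-byte header that starts every HTTP/2 frame.
function parseFrameHeader(buf) {
  if (buf.length < 9) throw new RangeError('an HTTP/2 frame header is 9 bytes');
  const typeCode = buf.readUInt8(3);
  return {
    length: buf.readUIntBE(0, 3),                // 24-bit payload length
    type: FRAME_TYPES[typeCode] ?? `UNKNOWN(${typeCode})`,
    flags: buf.readUInt8(4),                     // e.g. 0x1 END_STREAM on DATA
    streamId: buf.readUInt32BE(5) & 0x7fffffff,  // 31 bits; the top bit is reserved
  };
}

// A PUSH_PROMISE (type 5) on stream 1, flags END_HEADERS (0x4), 4-byte payload:
console.log(parseFrameHeader(Buffer.from([0, 0, 4, 5, 0x4, 0, 0, 0, 1])));
// → { length: 4, type: 'PUSH_PROMISE', flags: 4, streamId: 1 }
```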

In our case, we can take advantage of the HTTP/2 feature called "Server Push" to transfer the two binary images to the client over the same connection as index.html. Here is the code, which uses a Node.js module called spdy. Despite its name, spdy implements the HTTP/2 protocol too. Moving from the Node https module to spdy takes no code changes, just a different module name.

```javascript
const server = require('spdy')
```
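If you are on a recent Node.js, the built-in http2 module offers a similar drop-in path. This is a swapped-in alternative, not what the post uses. With `allowHTTP1: true`, one handler serves both HTTP/2 and HTTP 1.1 clients through the compatibility API. `req.httpVersion` then tells you which protocol was negotiated, the same "h2" that DevTools shows. The certificate paths, the port and the protocolLabel helper are assumptions.

```javascript
const http2 = require('node:http2');
const fs = require('node:fs');

// Name the negotiated protocol the way the DevTools "Protocol" column does.
function protocolLabel(req) {
  return req.httpVersion === '2.0' ? 'h2' : `http/${req.httpVersion}`;
}

// The compatibility API gives HTTP/2 requests the familiar (req, res) shape,
// so a simple HTTP 1.1 style handler like this one works unchanged.
function handler(req, res) {
  res.writeHead(200, { 'content-type': 'text/plain' });
  res.end(`served over ${protocolLabel(req)}\n`);
}

// Browsers only use HTTP/2 over TLS, so a (self-signed) key and certificate are required.
function startServer(keyPath, certPath, port = 5000) {
  const server = http2.createSecureServer({
    key: fs.readFileSync(keyPath),
    cert: fs.readFileSync(certPath),
    allowHTTP1: true, // clients that do not negotiate h2 fall back to HTTP 1.1
  }, handler);
  return server.listen(port);
}
```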

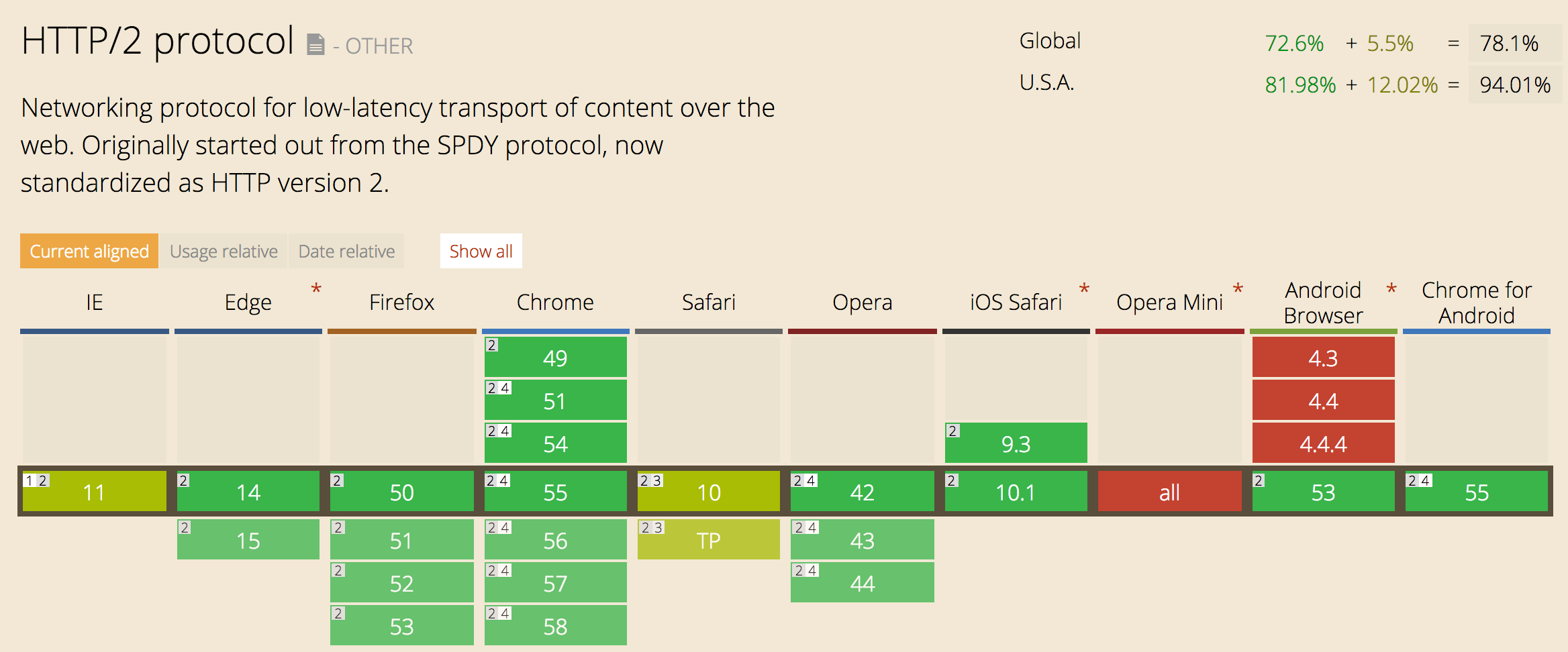

The Chrome browser (and other modern browsers too!) should have no problem connecting and receiving data over the HTTP/2 protocol. The new protocol has already been widely adopted, see http://caniuse.com/#feat=http2

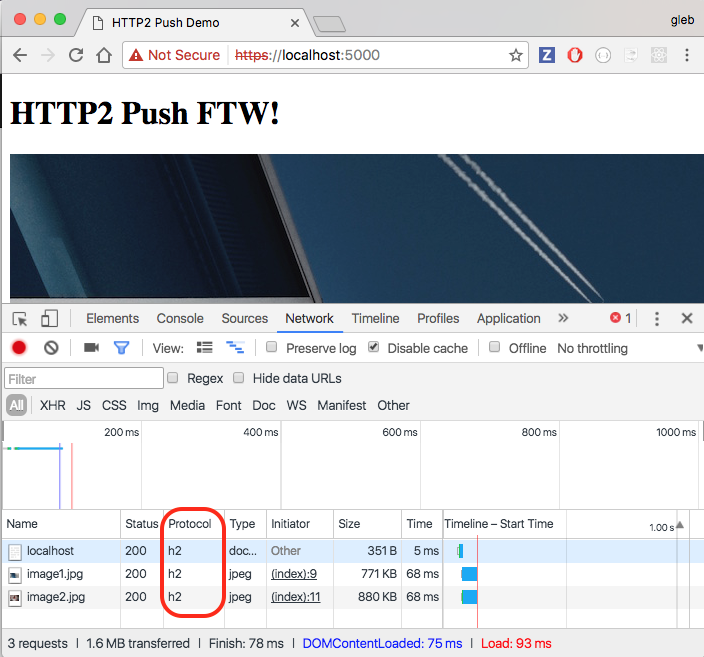

In DevTools, you might want to enable the "Protocol" column to see the "h2" value.

Yet there is no performance benefit so far. The server has to implement the "Server Push" feature, which sends the images along with the index.html response.

Server push

We need to serve the index.html page differently, and we can detect whether server push is available.

```javascript
app.get('/', serveHome)
```

If the client browser supports HTTP/2 (spdy determines this for us while setting up the connection), we should push the loaded file contents. This is very simple and can be factored into a clean function:

```javascript
const image1 = fs.readFileSync('./public/images/image1.jpg')
```

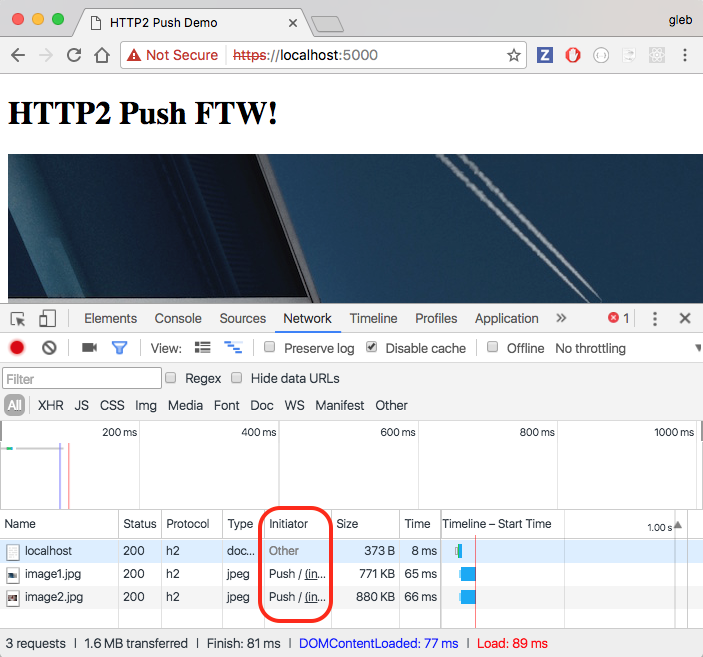

The serveHome function above preemptively pushes two files that the client "might" request. A pushed file is only used for a request with the matching URL and request headers.
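For comparison, here is a sketch of the same idea with Node's built-in http2 compatibility API. This is a swap from spdy, whose push API differs. It writes the page headers, pushes two preloaded images through `res.createPushResponse()`, and serves a plain response when push is unavailable. The PAGE and IMAGES contents are placeholders, and canPush is a hypothetical helper.

```javascript
// Sketch using Node's built-in http2 compatibility API (not spdy, which the post uses).
// Hypothetical preloaded contents; the post reads the real files with fs.readFileSync.
const PAGE = '<img src="/images/image1.jpg"><img src="/images/image2.jpg">';
const IMAGES = {
  '/images/image1.jpg': Buffer.from('placeholder jpeg 1'),
  '/images/image2.jpg': Buffer.from('placeholder jpeg 2'),
};

// Push needs an HTTP/2 response whose client has not disabled push
// (SETTINGS_ENABLE_PUSH). HTTP 1.1 responses have no createPushResponse().
function canPush(res) {
  return typeof res.createPushResponse === 'function' && res.stream.pushAllowed;
}

function serveHome(req, res) {
  res.writeHead(200, { 'content-type': 'text/html' });
  if (canPush(res)) {
    for (const [path, body] of Object.entries(IMAGES)) {
      // The browser can use a pushed response only for a matching later request (same URL).
      res.createPushResponse({ ':path': path }, (err, pushRes) => {
        if (err) {
          console.error(`push of ${path} failed:`, err);
          return;
        }
        pushRes.writeHead(200, { 'content-type': 'image/jpeg' });
        pushRes.end(body);
      });
    }
  }
  res.end(PAGE);
}
```

Use it as the request handler of a server created with `http2.createSecureServer()`. HTTP 1.1 clients simply get the page without pushes.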

The file contents have been preloaded (they could also be piped as a stream), and we open a push stream from the response object. Under the hood, the new stream is multiplexed over the same connection as the page response. This can improve performance, yet in my experiments the improvement was not very obvious.
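Here is the piped alternative: a sketch that streams a file from disk into a pushed response instead of preloading it. It again uses Node's built-in http2 compatibility API (`res.createPushResponse`) rather than spdy. The function name pushFileStream and its parameters are hypothetical.

```javascript
const fs = require('node:fs');

// Push one file by piping it from disk instead of preloading it into memory.
// ASSUMPTION: `res` is an HTTP/2 compatibility response from Node's built-in
// http2 module. Resolves once the pushed response has been fully written.
function pushFileStream(res, urlPath, filePath, contentType) {
  return new Promise((resolve, reject) => {
    res.createPushResponse({ ':path': urlPath }, (err, pushRes) => {
      if (err) return reject(err);
      pushRes.writeHead(200, { 'content-type': contentType });
      const source = fs.createReadStream(filePath);
      source.on('error', (readErr) => {
        pushRes.end(); // headers are already sent, so the client gets a truncated body
        reject(readErr);
      });
      pushRes.on('finish', resolve);
      source.pipe(pushRes); // pipe() handles backpressure and ends pushRes at EOF
    });
  });
}
```

Inside a handler, call it before ending the page response, for example `pushFileStream(res, '/images/image1.jpg', './public/images/image1.jpg', 'image/jpeg').catch(console.error)`.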

Deployment

I wanted to get a sense of how hard it is to get Server Push working in a more realistic "production" environment.

Let us deploy our application to Dokku, which I run on a small DigitalOcean droplet. You might want to read my other blog post if you have never dealt with Dokku before. I will push a local branch called "push" to Dokku as the remote "master" branch.

```shell
$ git push dokku push:master
```

The deployment goes through and the logs show the server has started. On Dokku:

```shell
dokku:~# dokku logs http2-push-example
```

The Node app does serve the index page if we grab the internal IP of the server process from the generated Nginx config:

```shell
dokku:~# cat /home/dokku/http2-push-example/nginx.conf
```

Now make the request to 172.17.0.9:5000, but remember that we have a self-signed certificate.

```shell
dokku:~# curl https://172.17.0.9:5000 --insecure
```

Yet when we try to fetch the page from the external address, we get a 502 error (I already got a TLS certificate using the Dokku LetsEncrypt plugin).

```shell
$ http https://http2-push-example.gleb-demos.com
```

Dokku runs two processes for each application: our server, and an Nginx proxy that handles domains, virtual hosts and so on. The Nginx proxy has trouble accepting our self-signed certificate AND cannot correctly proxy HTTP/2 connections with Server Push (see this issue first, and then the support announcement). Proxying HTTP/2 is a very different challenge from proxying HTTP 1.1: the connection is long-lived and binary.

For now we can disable the Nginx proxy and let the server respond directly.

```shell
dokku:~# dokku proxy:disable http2-push-example
```

Without a virtual host, we can access the application directly on the random port Dokku assigned to it.

```shell
$ curl https://gleb-demos.com:32768 --insecure
```

We can check whether the server supports HTTP/2 (of course it should) using the is-http2-cli tool:

```shell
$ npm i -g is-http2-cli
```
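I have not checked how is-http2-cli works internally. The usual way to detect HTTP/2 support is to see whether the server selects "h2" through ALPN during the TLS handshake. Here is a sketch with Node's built-in tls module. The helper names alpnOptions and supportsHttp2 are hypothetical, and `insecure` mimics `curl --insecure` for self-signed certificates.

```javascript
const tls = require('node:tls');
const net = require('node:net');

// TLS options that offer HTTP/2 ("h2") first and HTTP 1.1 second via ALPN.
function alpnOptions(host, port = 443, { insecure = false } = {}) {
  return {
    host,
    port,
    // SNI must be a hostname, not an IP address.
    servername: net.isIP(host) ? undefined : host,
    ALPNProtocols: ['h2', 'http/1.1'],
    // insecure: accept self-signed certificates, like curl --insecure.
    rejectUnauthorized: !insecure,
  };
}

// Resolves true if the server picks "h2" during the TLS handshake.
function supportsHttp2(host, port, opts) {
  return new Promise((resolve, reject) => {
    const socket = tls.connect(alpnOptions(host, port, opts), () => {
      const selected = socket.alpnProtocol; // e.g. 'h2', 'http/1.1', or false
      socket.end();
      resolve(selected === 'h2');
    });
    socket.on('error', reject);
  });
}
```

For the setup above, something like `supportsHttp2('gleb-demos.com', 32768, { insecure: true }).then(console.log)` should print `true` while the Node server terminates TLS itself.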

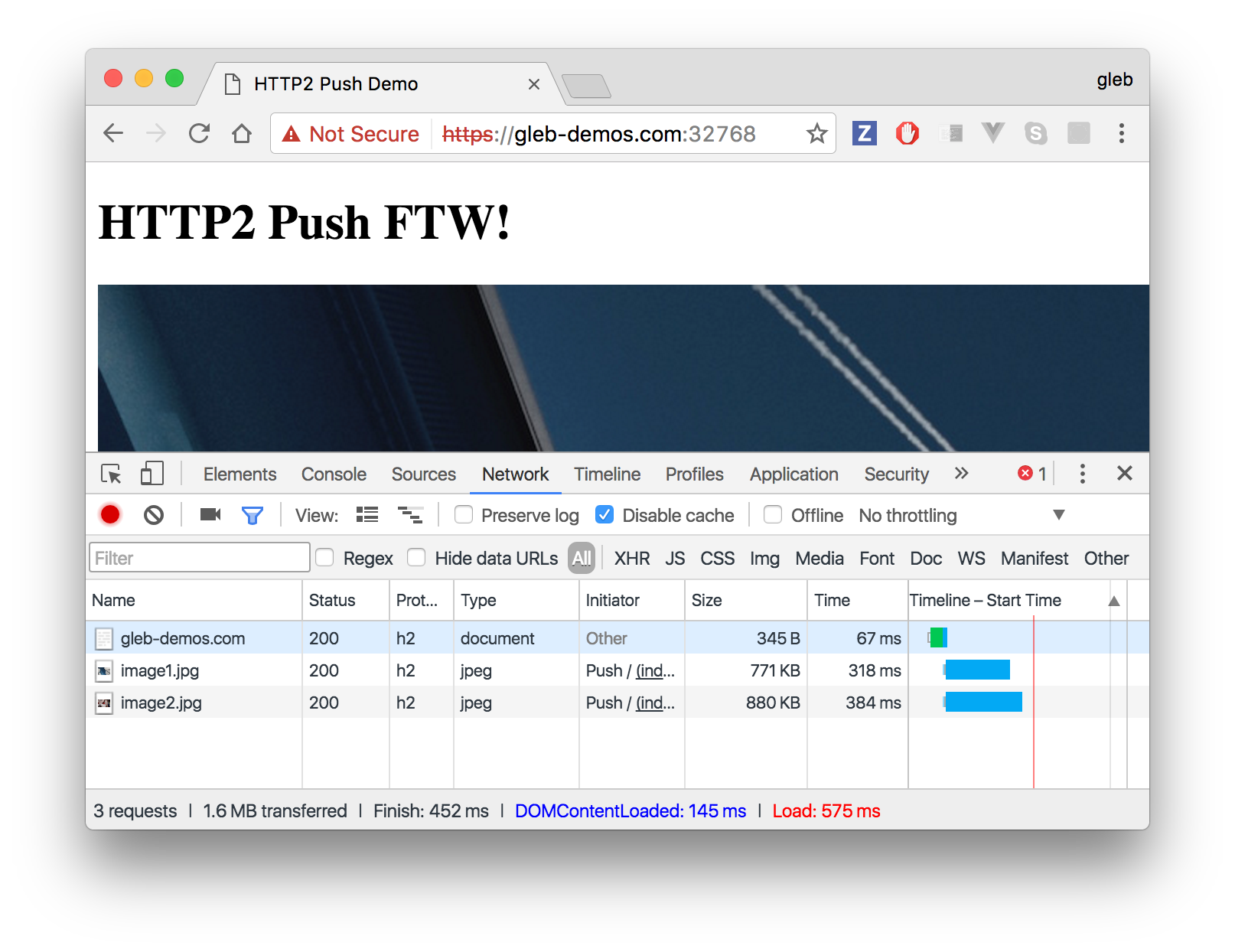

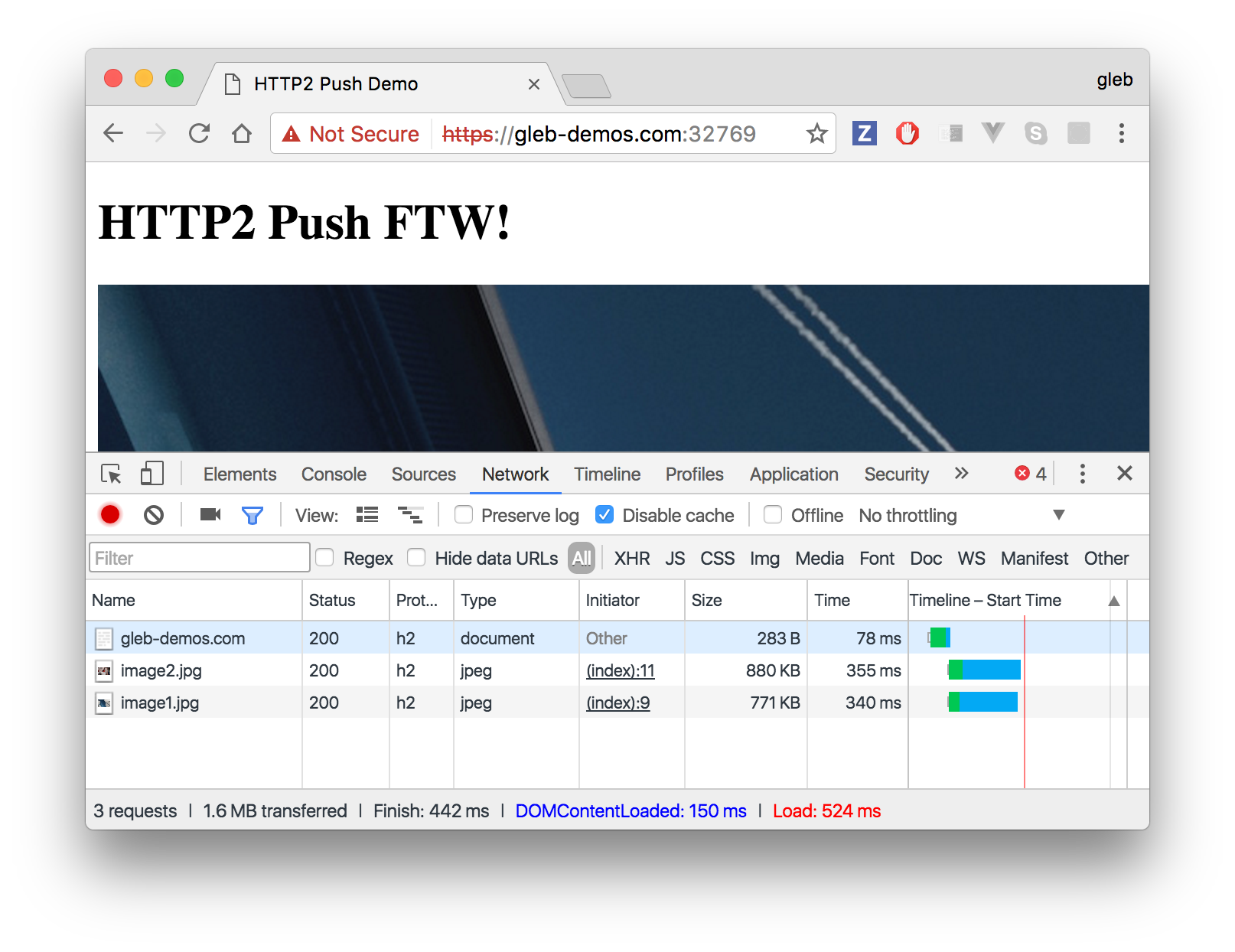

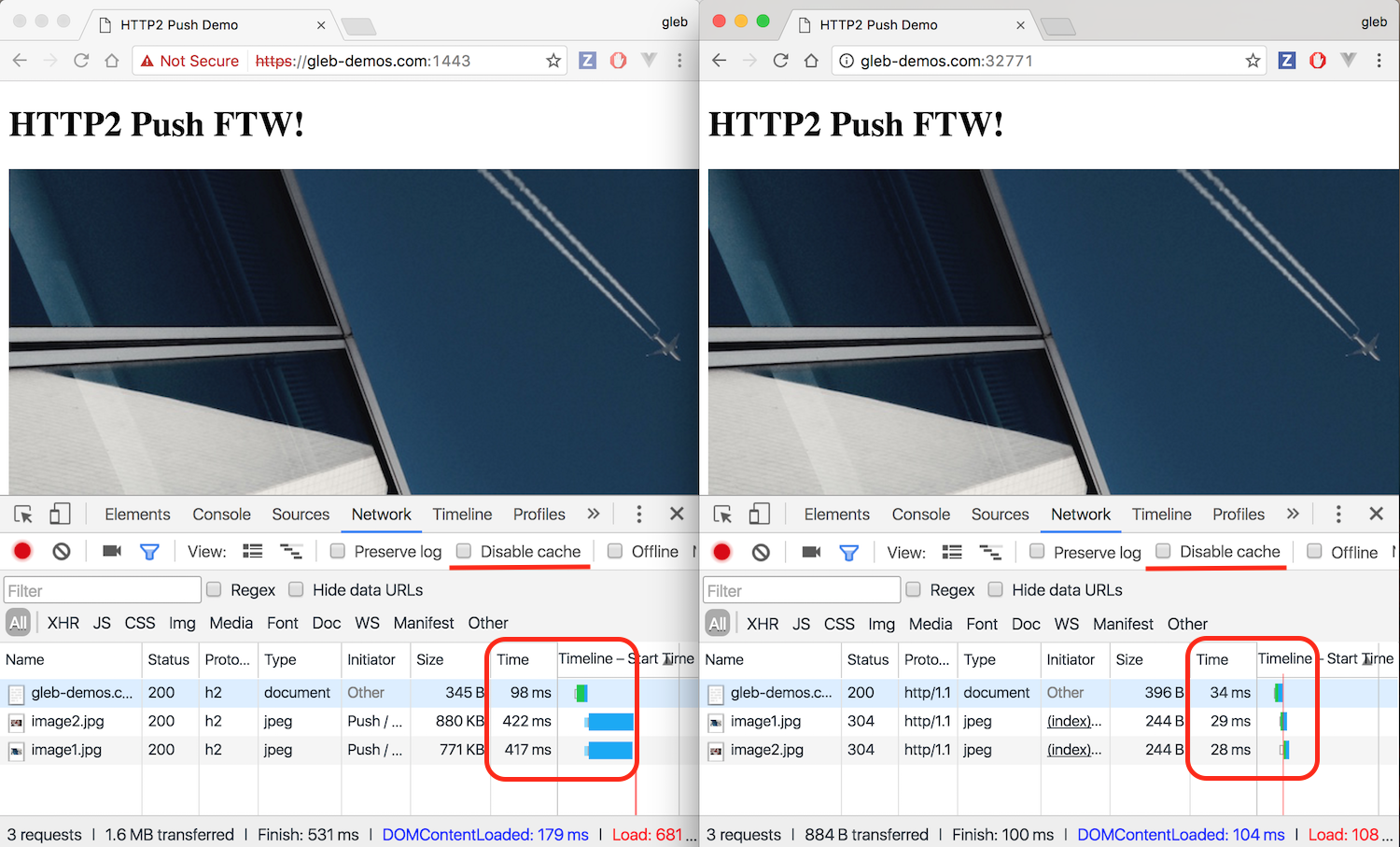

Opening the page in the browser shows the following network timings.

Same setup, but I have disabled server push in code:

```javascript
const homePageWithPush = files => {
```

Hmm, there is no performance gain at all! What is going on?

You can see the HTTP/2 version without server push at https://http2.gleb-demos.com/.

Running behind a proxy

Ordinarily, a Node.js program does not handle the client connection directly. A dedicated, "hardened" proxy like Nginx terminates the TLS connection and then forwards the request over a regular, unencrypted channel to the Node server.

Nginx does not support forwarding HTTP/2 with Server Push, so we need a different proxy. The author of spdy, Fedor Indutny, also wrote Bud, a TLS terminator that understands HTTP/2. Big thanks for both, Fedor!

We can install Bud locally to try it out. While Bud is written in C, it is distributed via NPM, and compiles as a native module on Mac.

```shell
$ npm install -g bud
```

First, we need to generate a default config file:

```shell
$ bud --default-config > bud.default.json
```

The generated bud.default.json has lots of settings, but for our purposes we only need to update two parameters (in a copy saved as bud.config.json). First, we need to set the destination for the proxy: the backend address. In our case, that is the local IP and, by default, port 5000.

```json
{
```

We also need to tell the proxy (the "frontend" connection) to forward HTTP/2 connections to the backend. We will add the "h2" protocol to the list of handshakes to be sent to the backend.

```json
{
```
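Both edits can also be scripted. This sketch copies a Bud config object, points the backend at the Node server and adds "h2" to the frontend protocol list. The field names `frontend.npn` and `backend[].host` / `backend[].port` are an ASSUMPTION based on the layout of `bud --default-config` as I recall it. Check them against your generated bud.default.json. The helpers configureBud and writeBudConfig are hypothetical.

```javascript
const fs = require('node:fs');

// ASSUMPTION: field names (frontend.npn, backend[].host/.port) follow the layout
// printed by `bud --default-config` as I recall it; verify against your file.
function configureBud(defaults, { host = '127.0.0.1', port = 5000 } = {}) {
  const config = JSON.parse(JSON.stringify(defaults)); // deep copy; input stays untouched
  // 1. Point the (single) backend at the Node server, keeping its other settings.
  const firstBackend = Array.isArray(config.backend) ? config.backend[0] : {};
  config.backend = [{ ...firstBackend, host, port }];
  // 2. Add "h2" to the frontend protocol list, listed first.
  config.frontend = config.frontend || {};
  const protocols = config.frontend.npn || [];
  config.frontend.npn = protocols.includes('h2') ? protocols : ['h2', ...protocols];
  return config;
}

// Hypothetical helper: read the defaults, apply the edits, write bud.config.json.
function writeBudConfig(src = 'bud.default.json', dest = 'bud.config.json', backend = {}) {
  const defaults = JSON.parse(fs.readFileSync(src, 'utf8'));
  fs.writeFileSync(dest, JSON.stringify(configureBud(defaults, backend), null, 2));
}
```

Running `writeBudConfig()` next to the generated file would produce the bud.config.json used by the command that starts the proxy.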

Let us start the Bud proxy:

```shell
$ bud -c bud.config.json
```

Then start our server. Since the Node server no longer needs to handle TLS, we remove the certificates and create the server with the spdy module, using a configuration that allows plain, non-SSL connections.

```javascript
// no certificates!
```
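Behind a TLS terminator, the Node side speaks HTTP/2 in cleartext ("h2c"). Browsers never use h2c directly, which is exactly why the proxy sits in front. The post does this with spdy. As a swapped-in sketch, Node's built-in `http2.createServer()` does the same without certificates, and the built-in client can confirm the push end to end without a browser. The page and image contents are placeholders, and createApp is a hypothetical name.

```javascript
const http2 = require('node:http2');

const IMAGE = Buffer.from('placeholder jpeg'); // stand-in for a real image file

// Cleartext HTTP/2 ("h2c"): no key or certificate, because the proxy in front
// terminates TLS. Uses the core stream API of Node's built-in http2 module.
function createApp() {
  const server = http2.createServer();
  server.on('stream', (stream, headers) => {
    if (headers[':path'] !== '/') {
      stream.respond({ ':status': 404 });
      stream.end();
      return;
    }
    stream.respond({ ':status': 200, 'content-type': 'text/html' });
    if (stream.pushAllowed) {
      stream.pushStream({ ':path': '/images/image1.jpg' }, (err, pushStream) => {
        if (err) {
          console.error('push failed:', err);
          return;
        }
        pushStream.respond({ ':status': 200, 'content-type': 'image/jpeg' });
        pushStream.end(IMAGE);
      });
    }
    stream.end('<img src="/images/image1.jpg">');
  });
  return server;
}
```

`createApp().listen(5000)` would then sit behind the proxy, with the proxy's backend pointing at port 5000.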

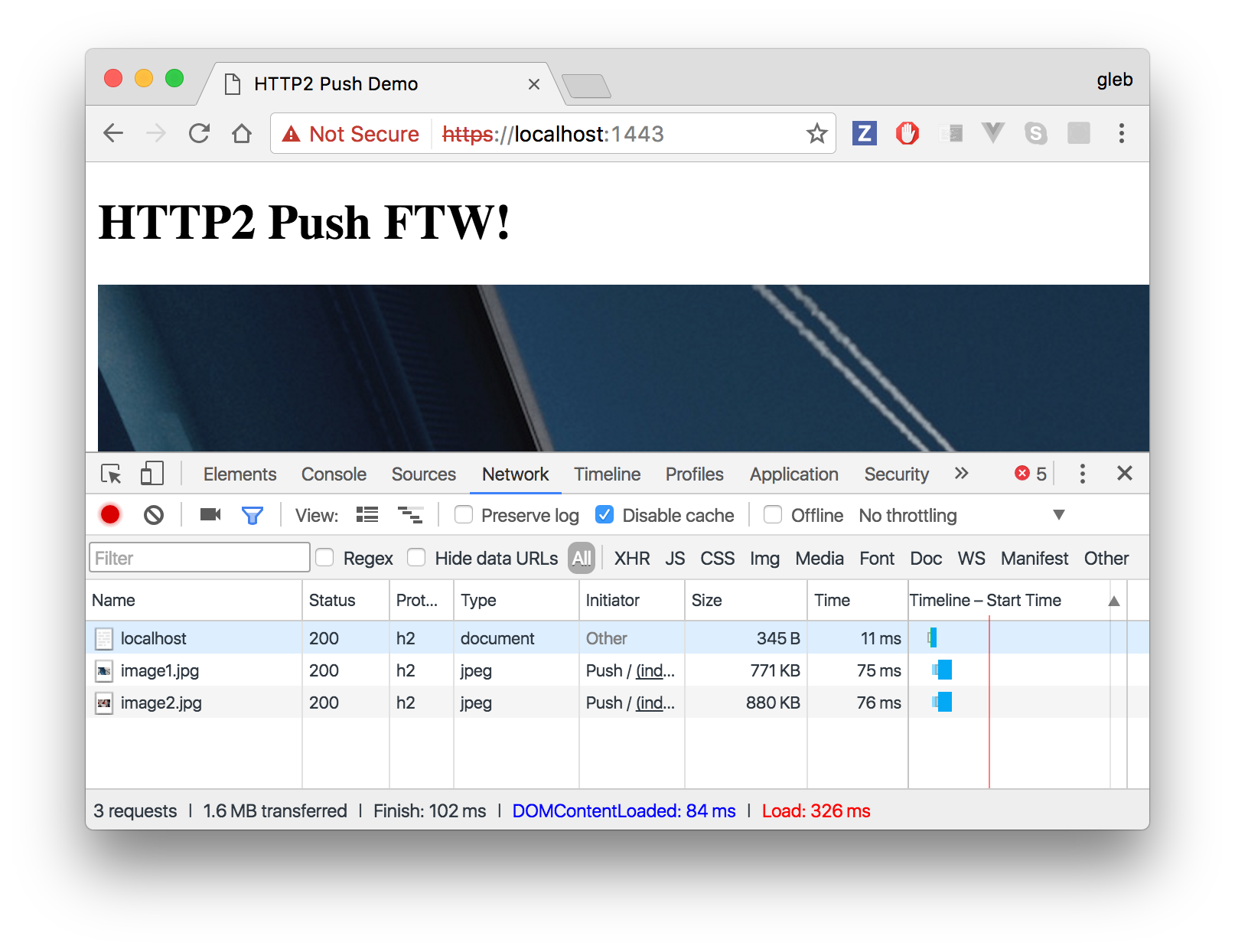

With both the proxy and our server running, the client can connect and receive HTTP/2 server pushes.

Bud on Dokku

Now we can push the server code to Dokku. We can also run Bud there as the proxy, replacing Nginx for this particular application.

Because we have disabled Nginx, we need to find the port the Node process is listening on; just run docker ps:

```shell
docker ps
```
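The port is in the PORTS column of `docker ps`, in the form `0.0.0.0:32771->5000/tcp`. Inside the container the app listens on 5000, as the earlier curl to 172.17.0.9:5000 shows. `docker port <container> 5000` prints the mapping directly. If you want to script the lookup instead, here is a small parser sketch. hostPortFor is a hypothetical helper.

```javascript
// Find the host port Docker mapped to a container port, given the PORTS column
// of `docker ps`, e.g. "0.0.0.0:32771->5000/tcp". Newer Docker versions also
// list an IPv6 mapping, separated by a comma.
function hostPortFor(portsColumn, containerPort = 5000) {
  for (const mapping of portsColumn.split(',')) {
    const match = mapping.trim().match(/:(\d+)->(\d+)\/tcp$/);
    if (match && Number(match[2]) === containerPort) return Number(match[1]);
  }
  return null; // container port not published
}

console.log(hostPortFor('0.0.0.0:32771->5000/tcp')); // → 32771
```

The column alone can be printed with `docker ps --format "{{.Names}}\t{{.Ports}}"`.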

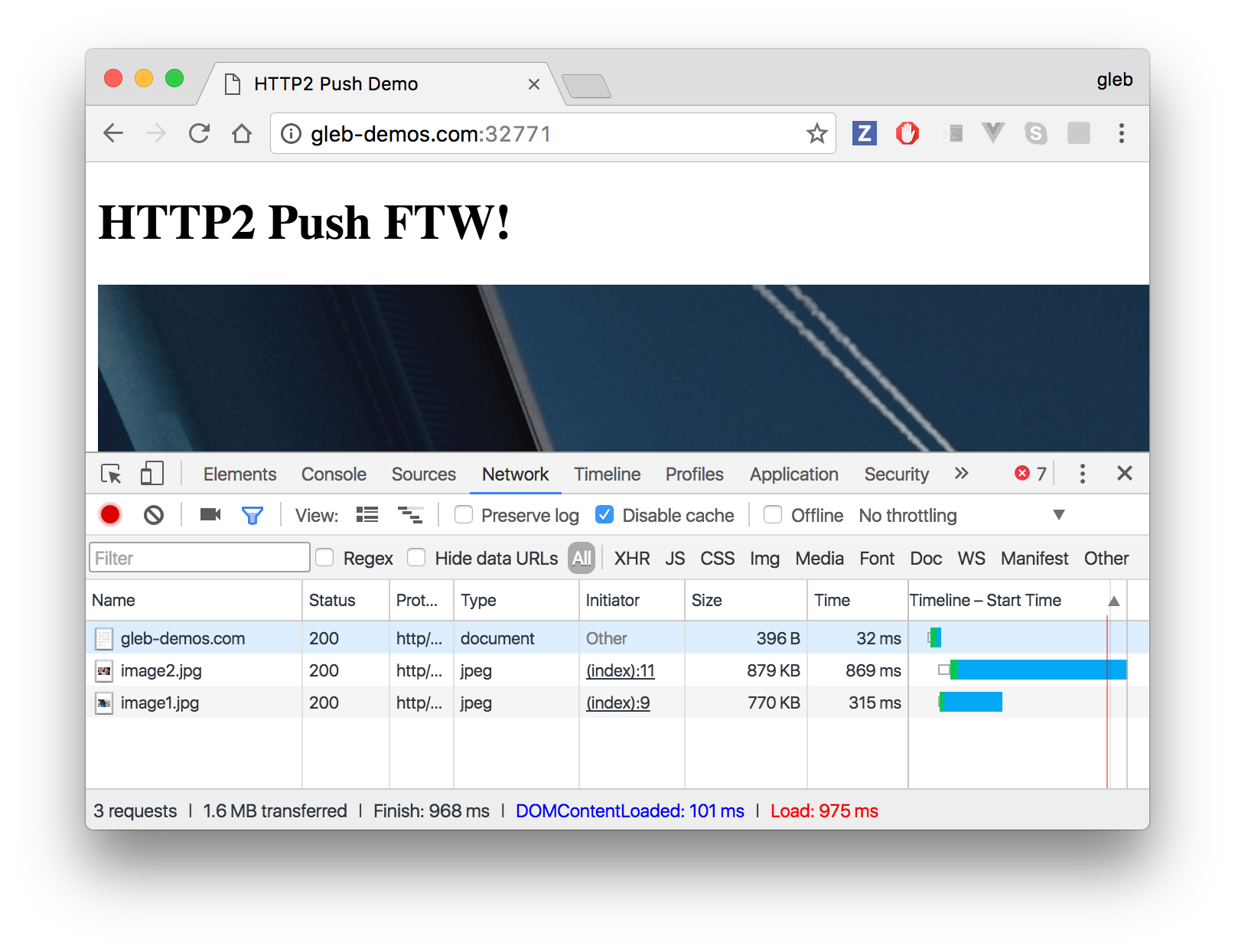

After deployment, the Node process is listening on port 32771.

We can test it over the insecure connection at http://gleb-demos.com:32771.

Let us secure the connection and allow HTTP/2 connections.

Put bud.config.json somewhere on the Dokku server and edit the backend address:

```json
{
```

Start the Bud process and keep it running after the SSH connection is closed:

```shell
nohup bud --config bud.config.json &
```

Now connect to https://gleb-demos.com:1443/, accepting the self-signed certificate.

In general, I found page loads over HTTP/2 with Server Push to be slightly faster than HTTP 1.1 page loads, yet I could not collect consistent data to prove it.

We should load-test our server with an HTTP/2-aware tool like h2load, but compiling this tool requires too much effort :(

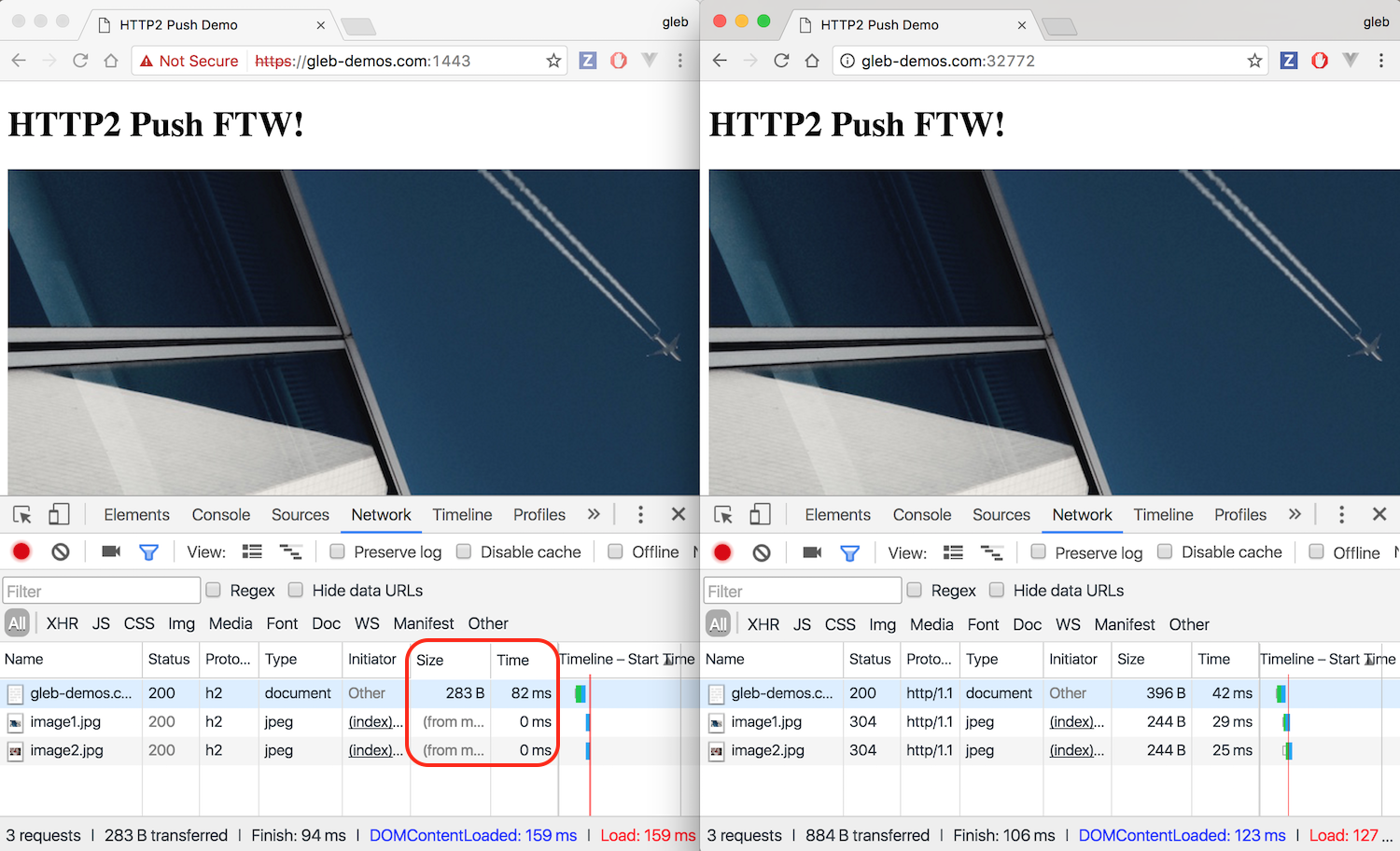

Browser caching is the real performance winner

The main performance benefit when downloading large resources comes not from optimizing the network or delivery, but from NOT downloading the same resource multiple times. In our implementation, Server Push always pushes the resources, without taking into account that they might already be in the browser's cache. Compare the page load performance with the DevTools "Disable cache" setting turned off: HTTP 1.1 beats Server Push handily!

We need to allow the browser to cache the pushed resources. Let us add the appropriate headers to the response.

```javascript
const imageOptions = {
```
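The key ingredient is caching headers on the pushed image responses. Here is a sketch of such headers as a plain object. The helper cacheableImageHeaders, the one-day max-age and the SHA-1 ETag are assumptions, not the exact contents of the post's imageOptions.

```javascript
const crypto = require('node:crypto');

// Headers for a pushed image that let the browser keep it in its cache.
// ASSUMPTION: a one-day max-age; choose a value that matches how often the images change.
function cacheableImageHeaders(body, maxAgeSeconds = 86400) {
  return {
    'content-type': 'image/jpeg',
    'content-length': body.length,
    'cache-control': `public, max-age=${maxAgeSeconds}`,
    // A content hash as ETag lets the browser revalidate cheaply once max-age expires.
    etag: `"${crypto.createHash('sha1').update(body).digest('hex')}"`,
  };
}
```

With Node's built-in http2 compatibility API, these would be passed when writing the pushed response, e.g. `pushRes.writeHead(200, cacheableImageHeaders(image1))`.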

After redeploying the server to Dokku, the images now appear instantly (they are part of the index.html page response). Note that the redeploy changed the application port to 32772.

Much better.

Thoughts

To be honest, I expected more from Server Push. Thanks to a flaky demo, I once got side-by-side data where the HTTP/2 version loaded the images in 1/3 of the time HTTP 1.1 took, and I got super excited. Yet I could not reproduce these numbers!

This is another reminder to be vigilant against drawing conclusions before the data can be confirmed.