I love Mocha and usually recommend it as an easy-to-learn, flexible unit testing framework. Lately, I have created two wrappers that extend Mocha with useful extra features: test order randomization and running previously failed unit tests first. I released these tools as NPM packages rocha and focha.

Randomize test order

Look at the spec file below. It passes in Mocha (and other BDD test runners), yet it has a huge defect:

    describe('example', function () {

The tests are interdependent: test 3 only passes if test 1 runs before it. If you modify the code and want to run just test 3, for example, it fails:

    it.only('runs test 3', function () {

It is easy to introduce dependencies among tests, especially if you do not adhere to functional programming. The dependencies lie dormant, ready to cause problems when you least expect them.
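To make the hidden coupling concrete, here is a minimal sketch that runs with plain Node. It does not use Mocha: the `tests` object, the `check` helper, and the `run` function are hypothetical stand-ins for a spec file and a test runner, and the shared `items` variable is an assumed example of state that one test sets up and another silently relies on.

```javascript
// Hypothetical stand-in for a spec file and runner; this is not Mocha itself.
let items; // shared mutable state: the hidden coupling between tests

// Tiny assertion helper so the sketch needs no imports.
function check(condition, message) {
  if (!condition) throw new Error(message);
}

const tests = {
  'runs test 1': () => { items = ['a']; check(items.length === 1, 'one item'); },
  'runs test 2': () => { check(1 + 1 === 2, 'math works'); },
  // Relies on test 1 having filled `items` earlier in the same run.
  'runs test 3': () => { check(items.length === 1, 'one item'); },
};

// Runs the named tests in the given order and returns the names that failed.
function run(names) {
  items = undefined; // every run starts from a clean state
  return names.filter((name) => {
    try {
      tests[name]();
      return false;
    } catch (err) {
      return true;
    }
  });
}

console.log(run(['runs test 1', 'runs test 2', 'runs test 3'])); // []
console.log(run(['runs test 3'])); // [ 'runs test 3' ]
```

The full run reports no failures, while running test 3 alone fails, which mirrors what happens with an exclusive test in a real runner.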

A good way to flush out the interdependencies among unit tests is to scramble the order of tests before running them. This is exactly what rocha does. After loading all spec files, it randomly changes the order of tests inside each describe block. If the tests pass, perfect: the order will be randomized again on the next run. Eventually, an ordering will come up that, due to interdependencies, causes unit tests to fail. In that case rocha saves the failing test order in a JSON file. On the next run, the same order is used, recreating the failing run. This gives you, the developer, an opportunity to execute the same order repeatedly, find how the unit tests depend on each other, and separate them.
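The shuffle-then-replay idea can be sketched in a few lines of Node. This is only an illustration of the technique, not rocha's actual implementation: the file name `example-test-order.json`, its location in the temp directory, and the helper names `shuffle`, `pickOrder`, and `recordResult` are all assumptions made for this example.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical location for the saved order; rocha's real file may differ.
const ORDER_FILE = path.join(os.tmpdir(), 'example-test-order.json');

// Fisher-Yates shuffle that returns a new array and leaves the input alone.
function shuffle(list) {
  const copy = list.slice();
  for (let i = copy.length - 1; i > 0; i -= 1) {
    const j = Math.floor(Math.random() * (i + 1));
    [copy[i], copy[j]] = [copy[j], copy[i]];
  }
  return copy;
}

// Reuse the saved failing order if there is one, otherwise shuffle.
function pickOrder(names) {
  if (fs.existsSync(ORDER_FILE)) {
    return JSON.parse(fs.readFileSync(ORDER_FILE, 'utf8'));
  }
  return shuffle(names);
}

// Keep the order after a failure so the next run replays it;
// forget it after a success so the next run is random again.
function recordResult(order, passed) {
  if (passed) {
    fs.rmSync(ORDER_FILE, { force: true });
  } else {
    fs.writeFileSync(ORDER_FILE, JSON.stringify(order, null, 2));
  }
}
```

A runner built on this sketch would call `pickOrder` before executing the tests and `recordResult` afterwards, so a failing order keeps coming back until the tests pass.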

    $(npm bin)/rocha spec.js

The above test order will be used by rocha as long as the tests fail. Once you fix the data problem and make the tests independent, the order will again be randomized on every run.

Remember: Random Mocha = rocha

Run failing tests first

As your JavaScript project grows, so does the number of unit tests. Soon, the CI takes minutes or tens of minutes to finish all of them. What happens when one or several unit tests fail? Well, you try to fix the tests, push the committed code and wait (and wait, and wait) for CI to rerun all tests before seeing whether the failed tests now pass.

I usually suggest splitting the project if the tests take too long to execute, but sometimes that's not possible due to code and team organization. In these cases focha could be a good solution. A wrapper around Mocha, it saves the list of failed unit tests (if any tests fail). Then, on the next run, these failing tests are executed first, giving you very quick feedback on whether your code changes solved the problem.
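The reordering step itself is small. The sketch below shows one way to move previously failed test names to the front of a run; the function name `failedFirst` and the test names are hypothetical, and this is not necessarily how focha does it internally.

```javascript
// Puts names recorded as failed ahead of the rest, keeping relative order
// inside both groups. Names in previouslyFailed that no longer exist are ignored.
function failedFirst(allTests, previouslyFailed) {
  const failed = new Set(previouslyFailed);
  const first = allTests.filter((name) => failed.has(name));
  const rest = allTests.filter((name) => !failed.has(name));
  return first.concat(rest);
}

console.log(failedFirst(['A', 'B', 'C', 'D'], ['D'])); // [ 'D', 'A', 'B', 'C' ]
```

With an empty list of previous failures the order is unchanged, so the same code path works for a fully green project.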

For example, the following spec file has the last test failing.

    const passes = () => {}

The package.json has a test script that calls focha once to run just the failing tests (if any), and then a second time to run all tests:

    {

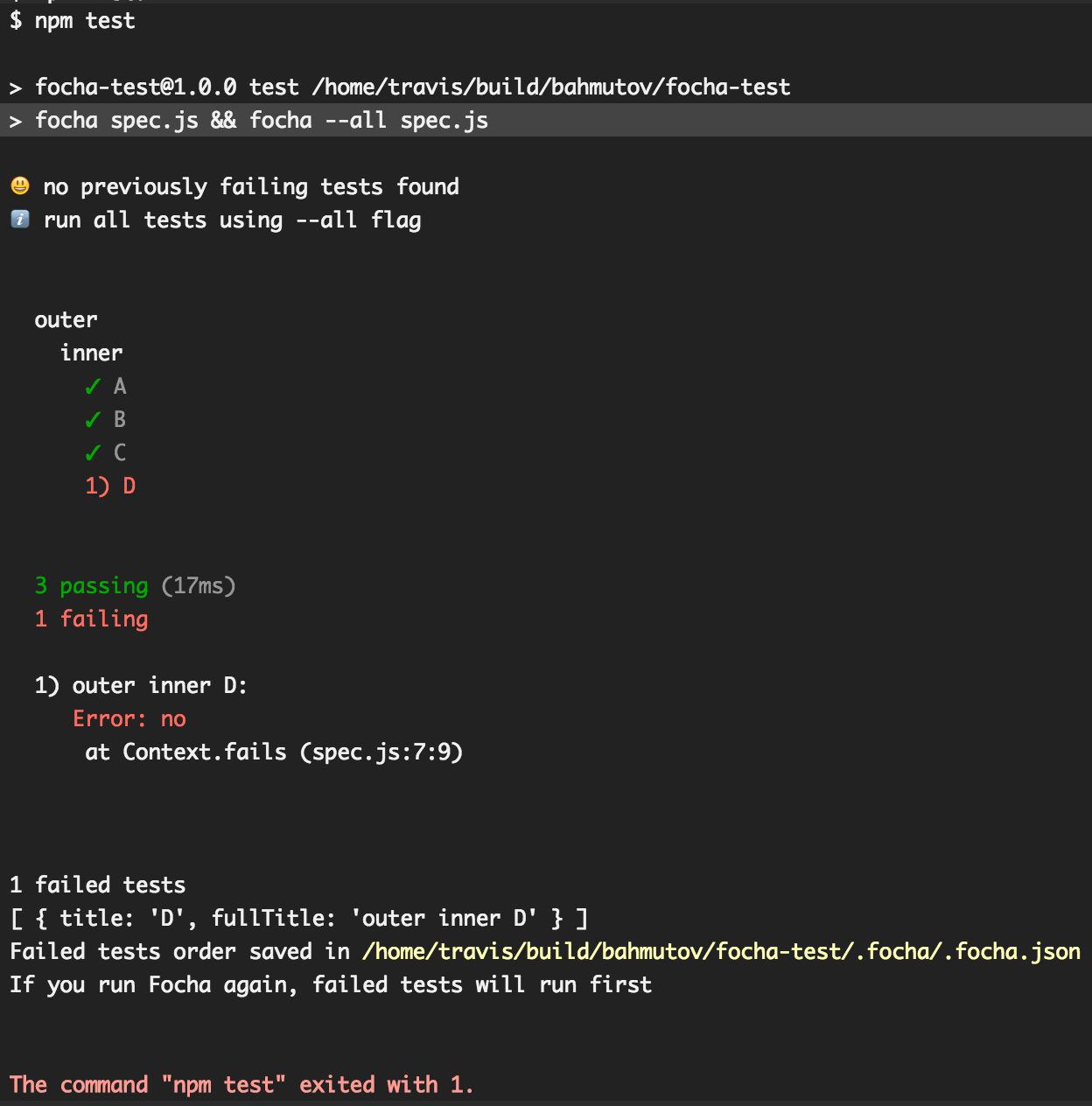

TravisCI runs these tests and fails.

When a test fails, the failing test names are saved into the file .focha/.focha.json. TravisCI, like other CI systems, allows saving workspace files to be loaded by the next build job; this is how the node_modules folder can be cached to speed up the build, for example. We can save the .focha folder after running the tests and restore it before running them. Here is the .travis.yml file we are using in the demo project.

    language: node_js

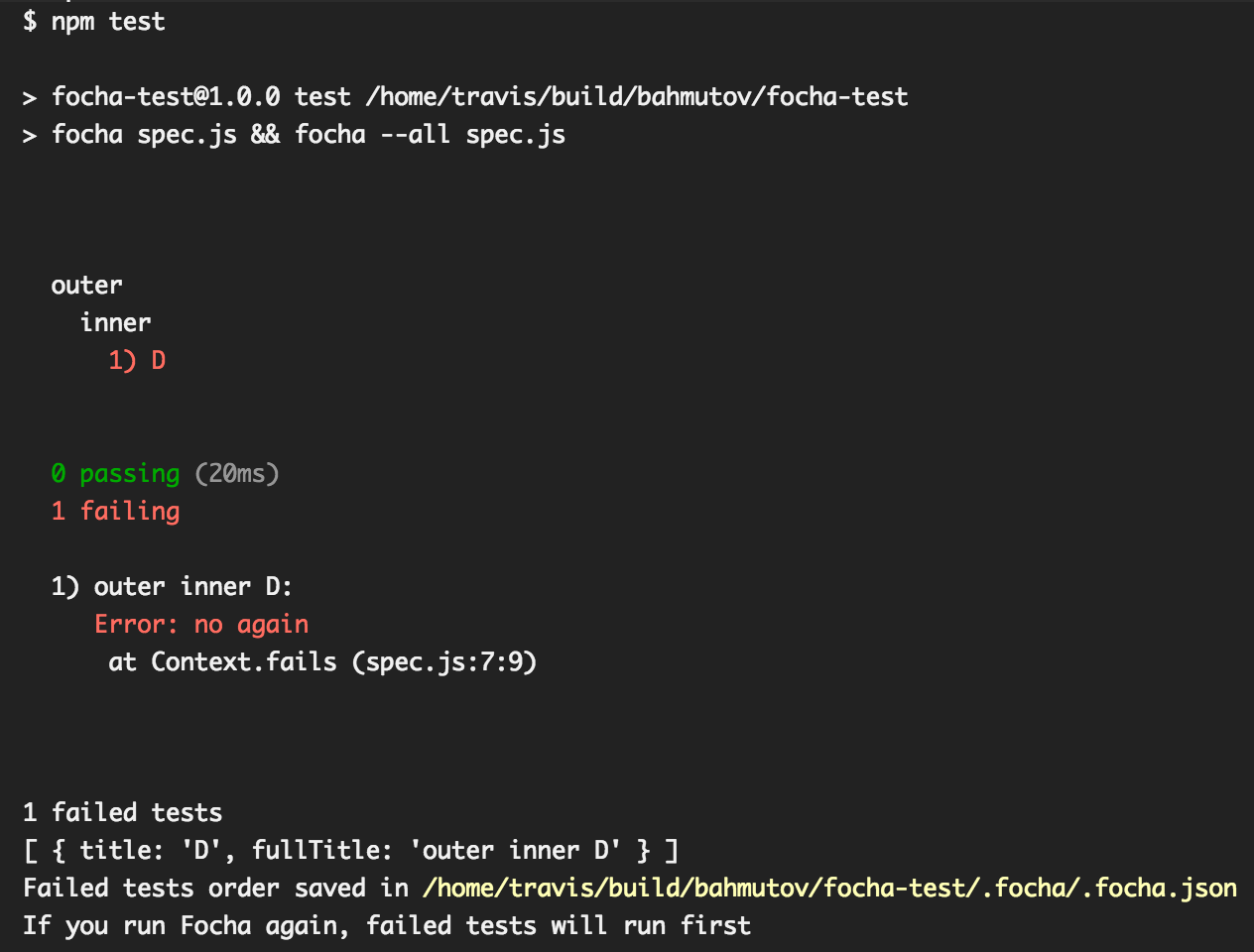

Imagine we modify the code just a little, so that the failing test still fails, and push the code.

    const passes = () => {}

The CI runs just the failing test in builds/244784772, so we quickly learn that our code modification has not solved the problem.

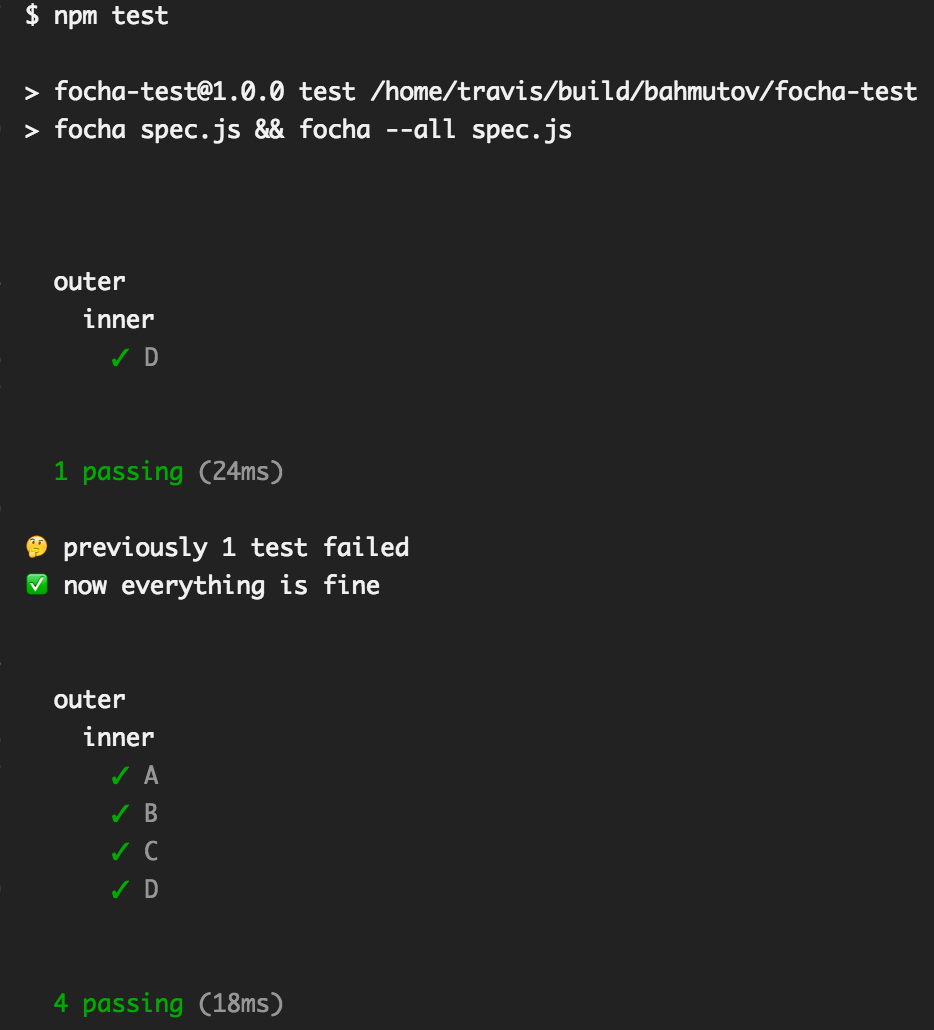

Finally, let us fix the spec and observe the CI:

    - it('D', fails)

The CI first runs the previously failing test, which passes in builds/244786471, and then runs all the tests to make sure the project is 100% green.

Remember: Failing Mocha = focha

Happy testing!