This blog post shows how I cut the execution time of a GitHub Actions workflow from 9 minutes to 4 minutes by running E2E test jobs in parallel.

- The initial workflow

- Split the workflow: first attempt

- Split the workflow: second attempt

- Parallelization

- Dominant spec

- The final result

The initial workflow

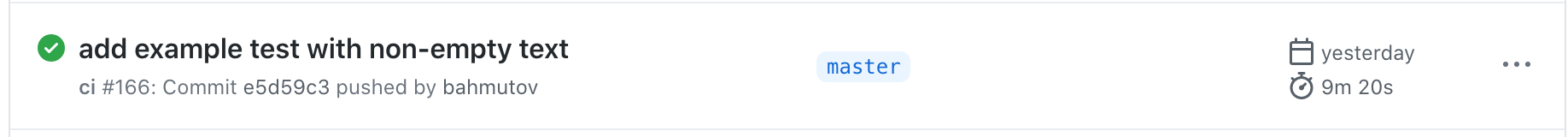

In my repository bahmutov/cypress-examples the testing workflow has reached a tipping point: 9 minutes of running time.

For me, any CI workflow longer than 3 minutes is too long, especially for a simple project like cypress-examples. After all, it does only a few things serially:

- installs dependencies

- runs all Markdown specs with E2E tests

- converts Markdown specs into static pages via Vuepress

- runs the extracted JavaScript specs against the built Vuepress site

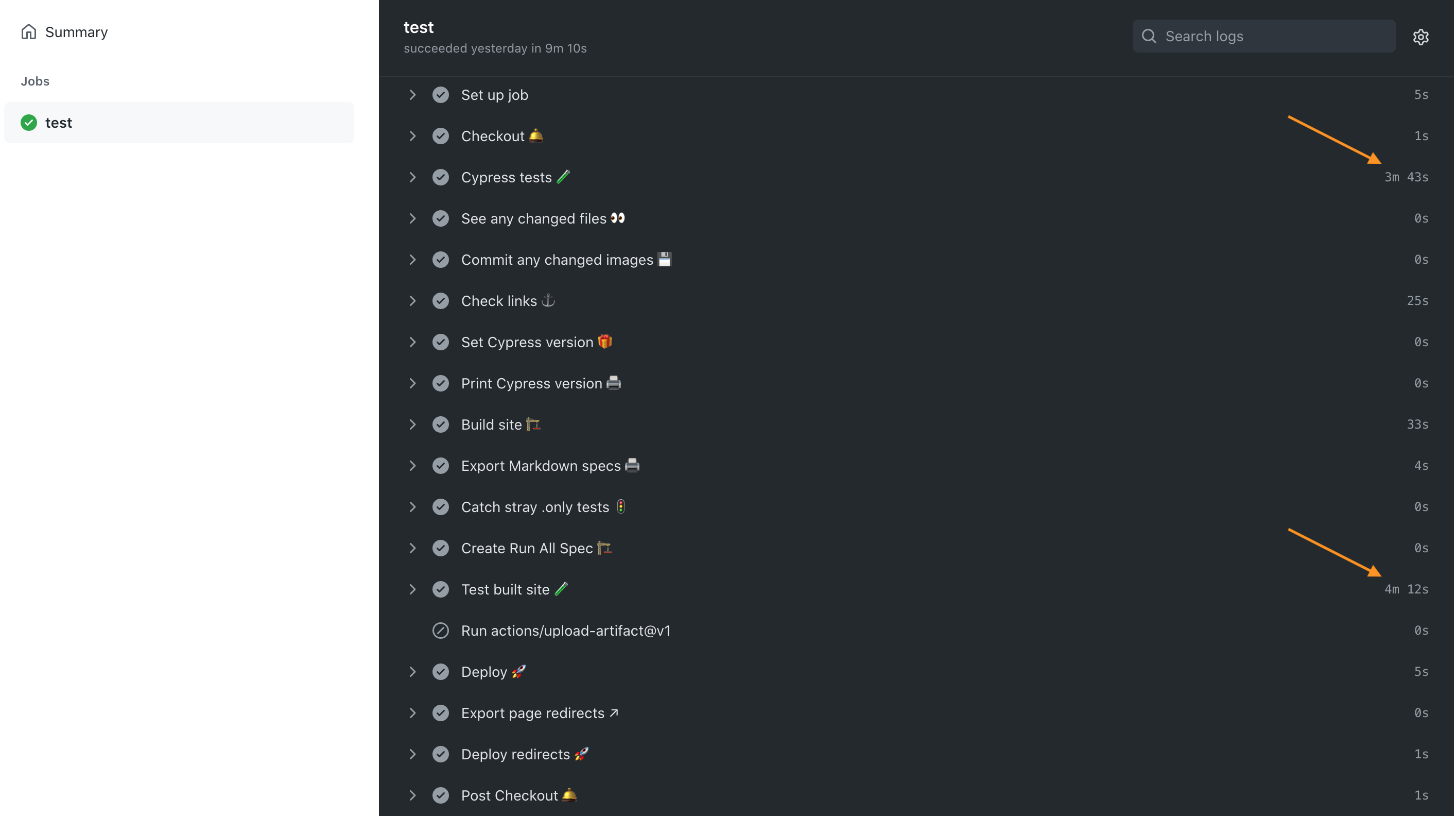

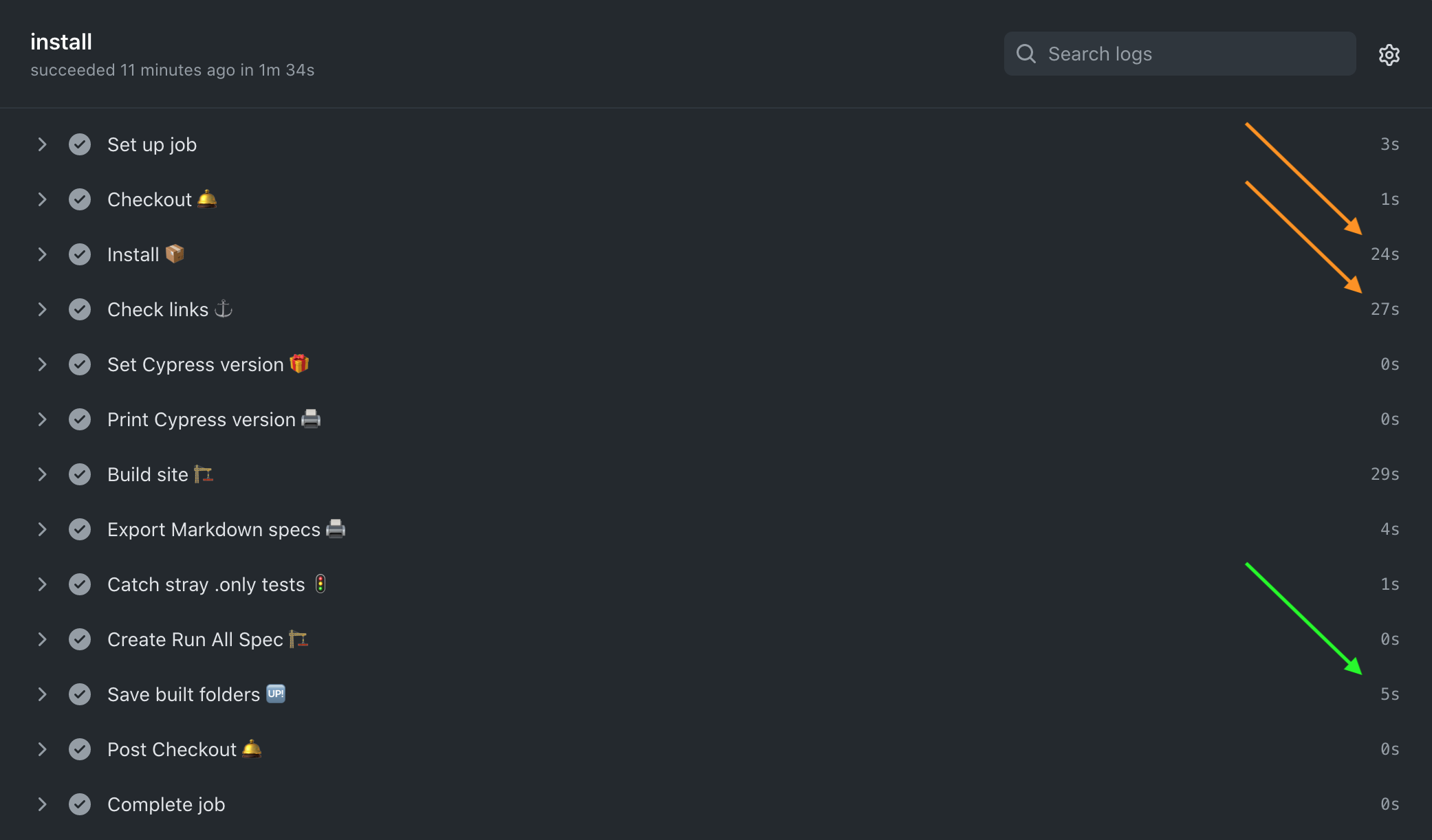

The steps that take the longest are highlighted in the workflow timings.

Can we optimize the workflow to speed it up? Can we run the Markdown tests and the JavaScript specs in parallel?

Note: the Markdown specs in this repository are running E2E Cypress tests from the Markdown files.

Split the workflow: first attempt

We should split the long workflow into several jobs. The first job should install the dependencies, then the test jobs can run in parallel. We can pass the installed dependencies from job to job using the GitHub artifact actions.

    jobs:
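A minimal sketch of what such an install job could look like. The action versions, the artifact names, and the use of `npm ci` are assumptions made for illustration, not the exact workflow from the repository.

```yaml
# Sketch only: versions and artifact names are assumptions.
jobs:
  install:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      # install the NPM dependencies and the Cypress binary
      - run: npm ci
      # pass the installed folders to the other jobs as artifacts
      - uses: actions/upload-artifact@v4
        with:
          name: node-modules
          path: node_modules
          # node_modules contains dot-folders like .bin,
          # which recent v4 releases skip by default
          include-hidden-files: true
      - uses: actions/upload-artifact@v4
        with:
          name: cypress-binary
          path: ~/.cache/Cypress
```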

The test job can download the artifacts:

    test-markdown:
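A sketch of a test job that restores those folders, under the same assumptions (hypothetical artifact names `node-modules` and `cypress-binary`, and `npx cypress run` standing in for the real test command). Artifacts do not keep file permissions, so the executable bits have to be restored by hand.

```yaml
# Sketch only: artifact names and the test command are assumptions.
jobs:
  test-markdown:
    # start only after the install job has uploaded its artifacts
    needs: install
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/download-artifact@v4
        with:
          name: node-modules
          path: node_modules
      - uses: actions/download-artifact@v4
        with:
          name: cypress-binary
          path: ~/.cache/Cypress
      # uploaded files lose their executable permission
      - run: chmod -R +x node_modules/.bin ~/.cache/Cypress
      - run: npx cypress run
```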

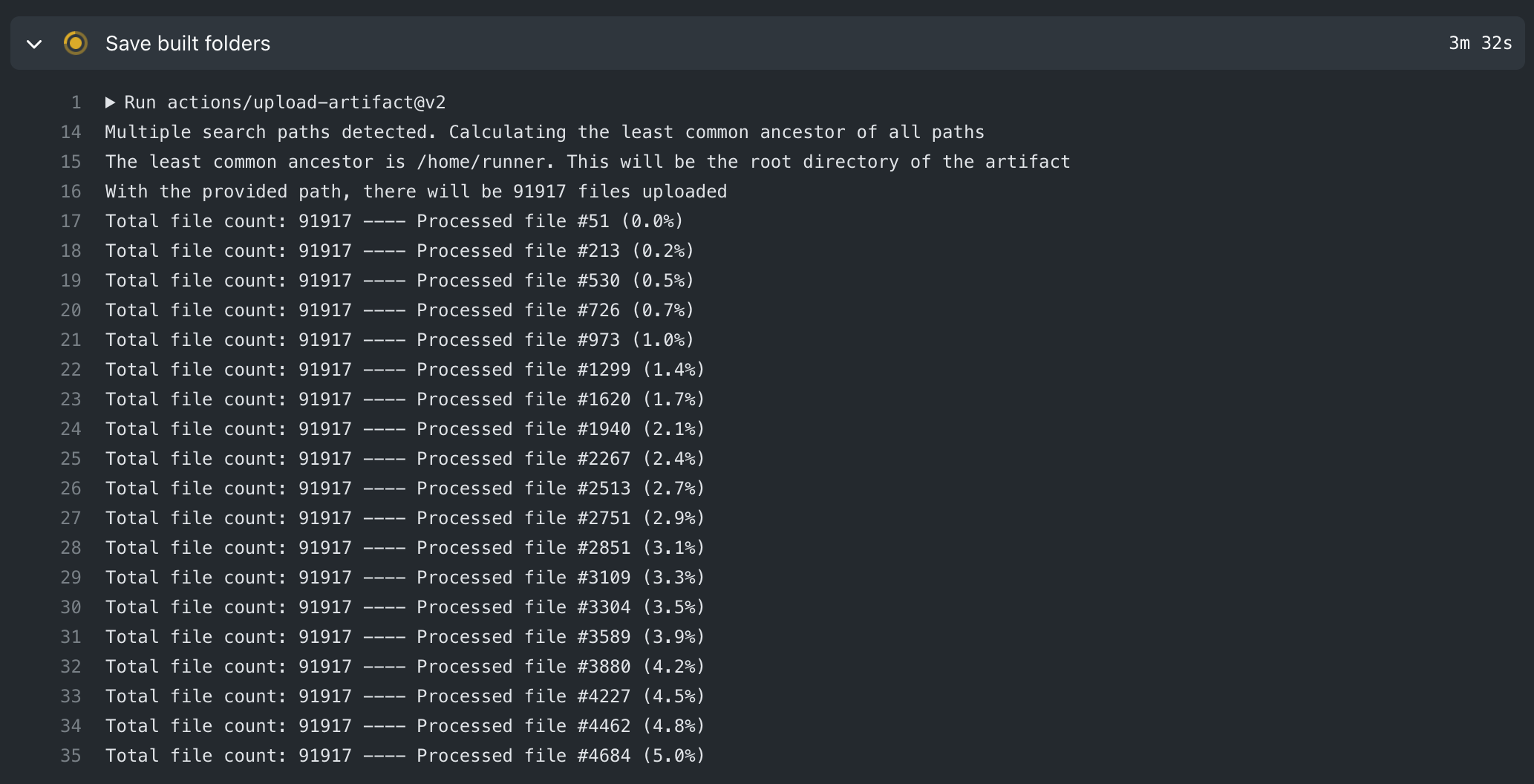

The workflow runs ... and is super slow. Uploading node_modules, the Cypress binary folder, and the built folders takes forever.

Turns out, uploading and downloading folders with lots of files, like node_modules or ~/.npm, is super slow, especially compared to actions/cache, which restores the cached NPM and Cypress binary dependencies much, much faster.

Split the workflow: second attempt

Let's rethink our strategy. We have 3 types of files and folders in our test jobs.

- the source files from the repository, like the folders ./docs and ./src, and files like package.json. These files can be quickly downloaded using the actions/checkout action.

- the dependencies folders like node_modules and ~/.cache/Cypress. They can be quickly installed and cached between runs using the cypress-io/github-action action. We can install these dependencies and skip running tests using an argument

      - name: Install 📦

- folders modified or created by the test job itself. In our case the test code creates a new folder ./public and modifies a few files in the ./docs folder. These two folders are small and can be efficiently uploaded and downloaded using the actions/upload-artifact and actions/download-artifact actions.
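For the second item, the install-only step could look like the sketch below. The `runTests` parameter is part of cypress-io/github-action; the action version is an assumption.

```yaml
- name: Install 📦
  uses: cypress-io/github-action@v6
  with:
    # install and cache the dependencies, but do not run any tests
    runTests: false
```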

Thus our workflow uses different actions for different folders. The install job, for example, can do the following:

    install:
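A sketch of such an install job, combining the three kinds of actions. The NPM script names `check:links` and `build`, the artifact name `built`, and the action versions are hypothetical placeholders.

```yaml
# Sketch only: script names, artifact name and versions are assumptions.
jobs:
  install:
    runs-on: ubuntu-latest
    steps:
      # 1. source files
      - name: Checkout 🛎
        uses: actions/checkout@v4
      # 2. dependencies, restored from the cache when the lock file matches
      - name: Install 📦
        uses: cypress-io/github-action@v6
        with:
          runTests: false
      # hypothetical scripts: check the links and build the static site
      - run: npm run check:links
      - run: npm run build
      # 3. small folders created or modified by this job
      - name: Save built folders 💾
        uses: actions/upload-artifact@v4
        with:
          name: built
          path: |
            docs
            public
```

Because both paths share the repository root as their common parent, the artifact keeps the docs and public folder names, and a later download into the workspace puts them back in place.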

The install job shows the time savings. Restoring the dependencies is fast because most of the builds run with the same package lock files, thus avoiding the full re-install. Next, the link check takes half a minute (we can optimize it later). Finally, saving the built folders takes only 5 seconds (the green arrow).

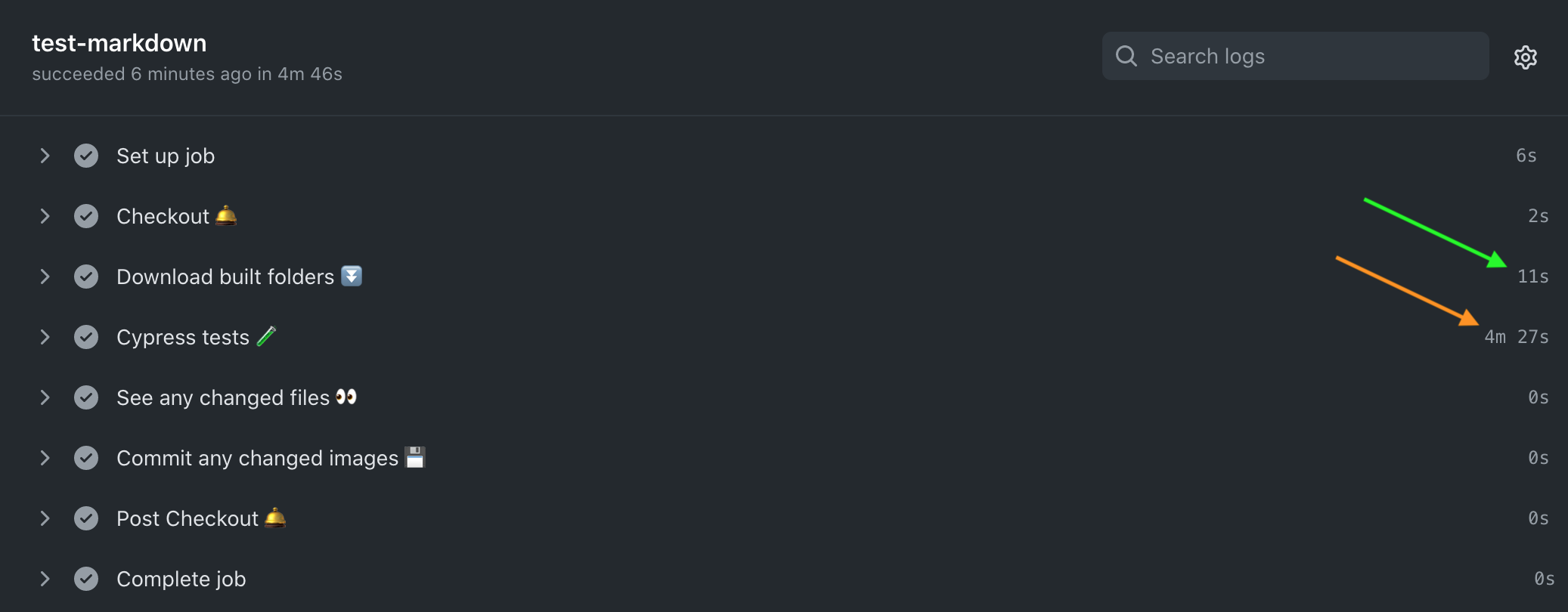

Let's take the job that runs the Markdown tests. It needs to check out files, download the built folders, install dependencies, and run tests.

    test-markdown:
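A sketch of those four steps as a job. The artifact name `built` and the action versions are assumptions, and the real job may pass extra options to select the Markdown specs.

```yaml
# Sketch only: artifact name and versions are assumptions.
jobs:
  test-markdown:
    needs: install
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      # restores the docs and public folders into the workspace
      - name: Download built folders
        uses: actions/download-artifact@v4
        with:
          name: built
      # installs the dependencies (from the cache) and runs the tests
      - name: Run Markdown specs
        uses: cypress-io/github-action@v6
```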

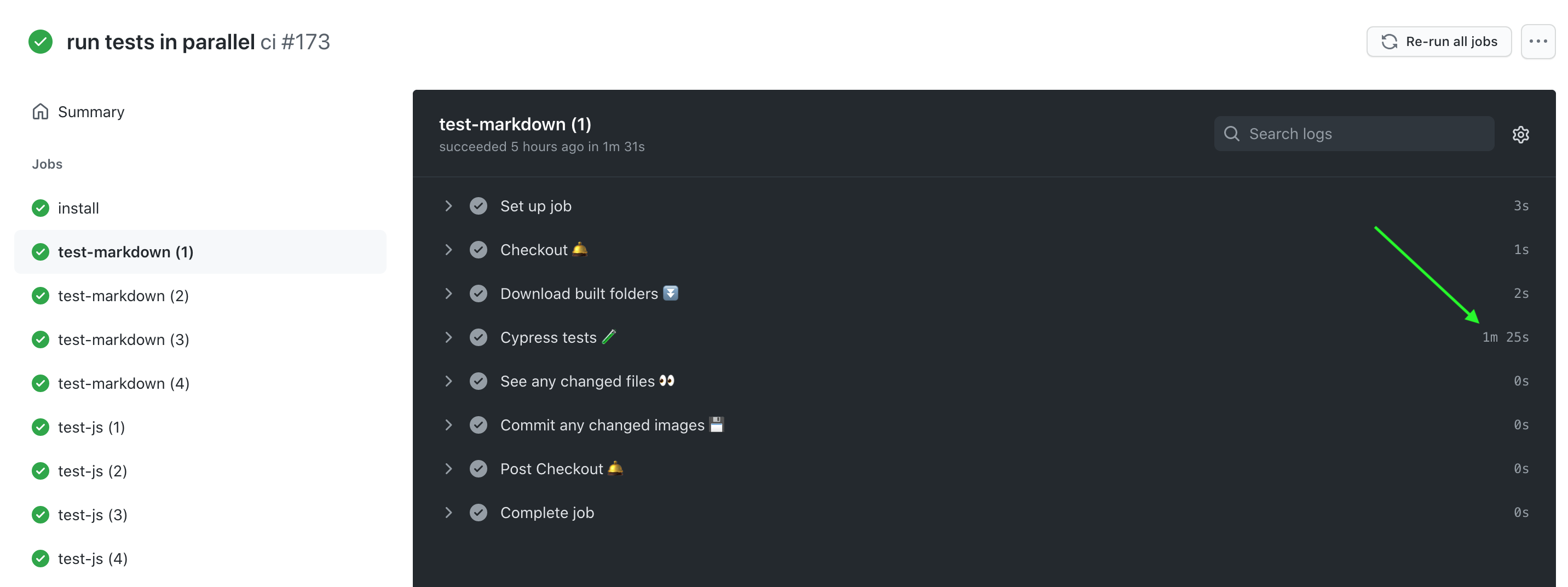

The job takes only a few seconds to download the built folders; the rest of the time is spent running the Markdown spec files.

There are 31 Markdown files, and they are executed serially. We will split them up later.

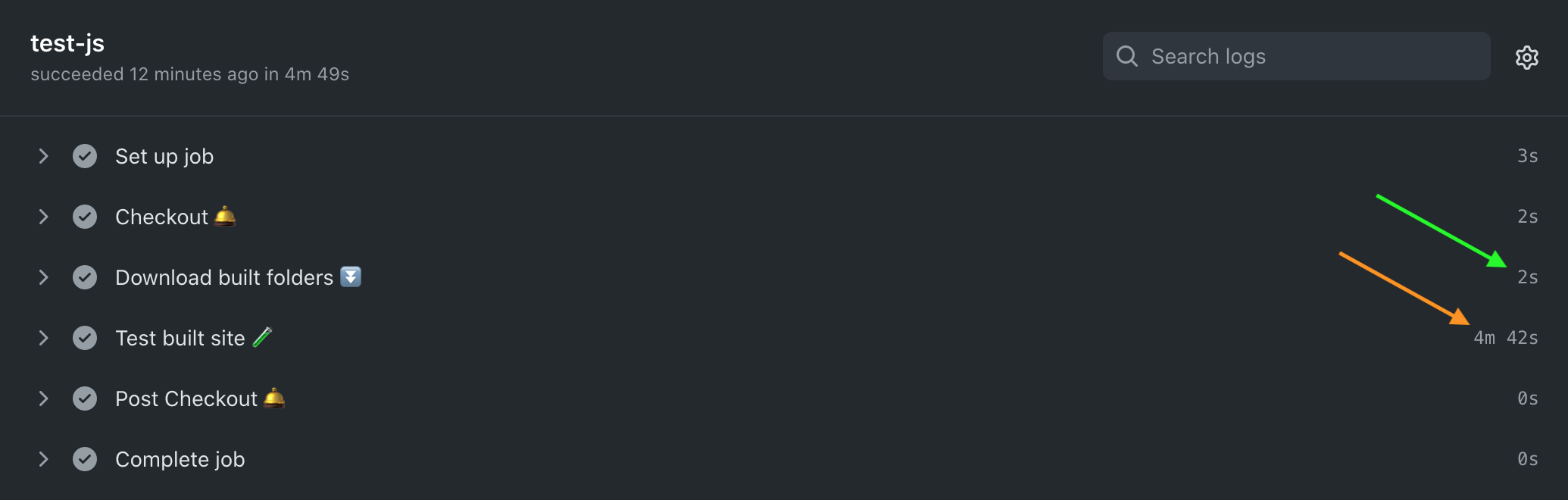

The test job that runs the exported JavaScript spec files similarly starts the tests after a quick setup.

Note: technically we could have passed the installed dependencies folders node_modules and ~/.cache/Cypress using the actions/cache action, instead of caching the ~/.npm and ~/.cache/Cypress folders and re-installing from them using cypress-io/github-action. But the time savings would be minimal, and the complexity of remembering the syntax is not worth it. Using cypress-io/github-action always wins by its simplicity.
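For comparison, the actions/cache alternative would need a step like this sketch in every job. The key format and the package-lock.json file name are assumptions.

```yaml
- name: Cache installed dependencies
  uses: actions/cache@v4
  with:
    path: |
      node_modules
      ~/.cache/Cypress
    # a new lock file produces a new key and forces a fresh install
    key: deps-${{ runner.os }}-${{ hashFiles('package-lock.json') }}
```

The install job would still have to run the install command itself whenever the cache misses, which is the part that cypress-io/github-action handles for us.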

Now that we know how to split workflows and pass folders from job to job, we can make the entire run faster using Cypress parallelization.

Parallelization

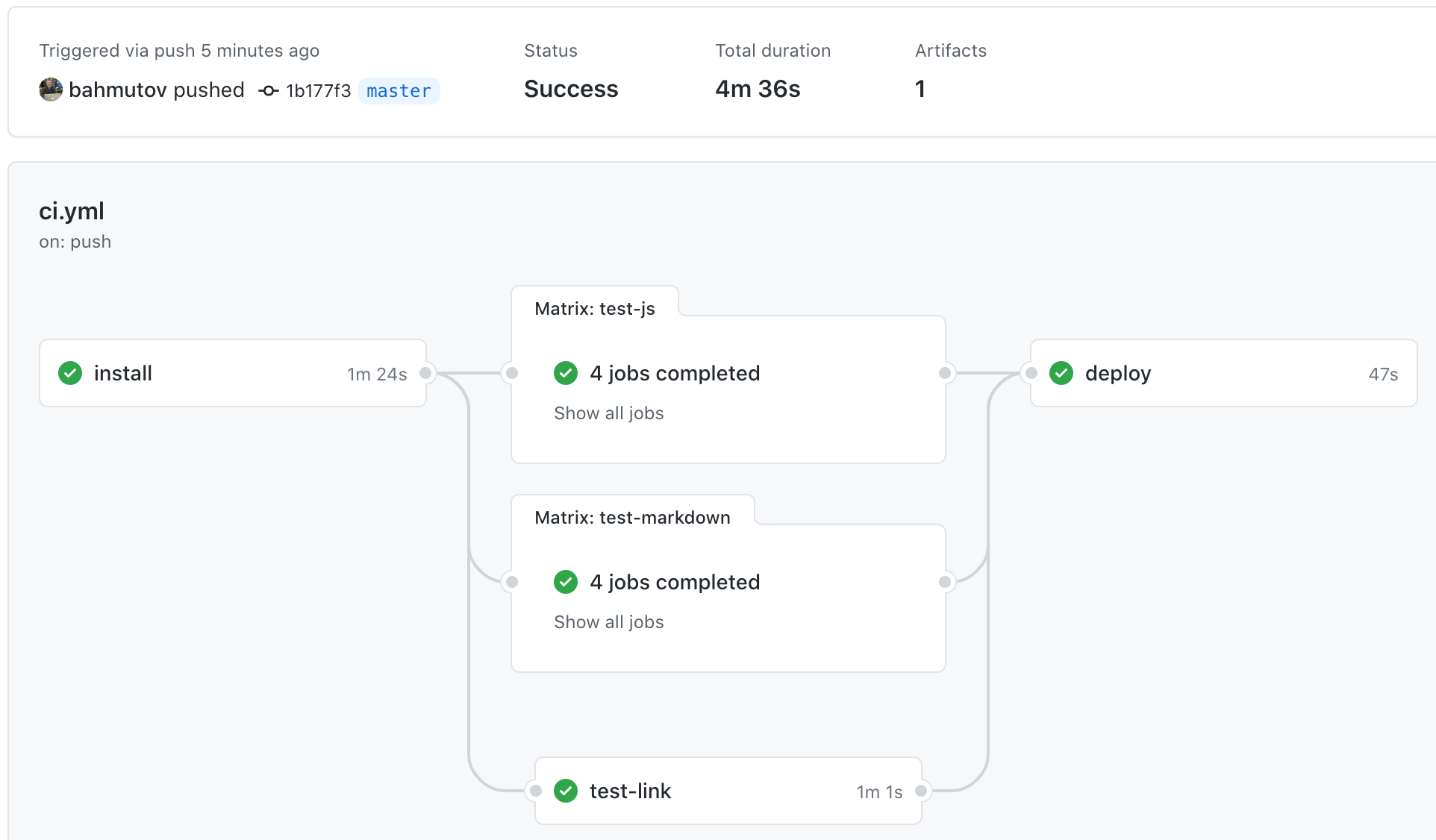

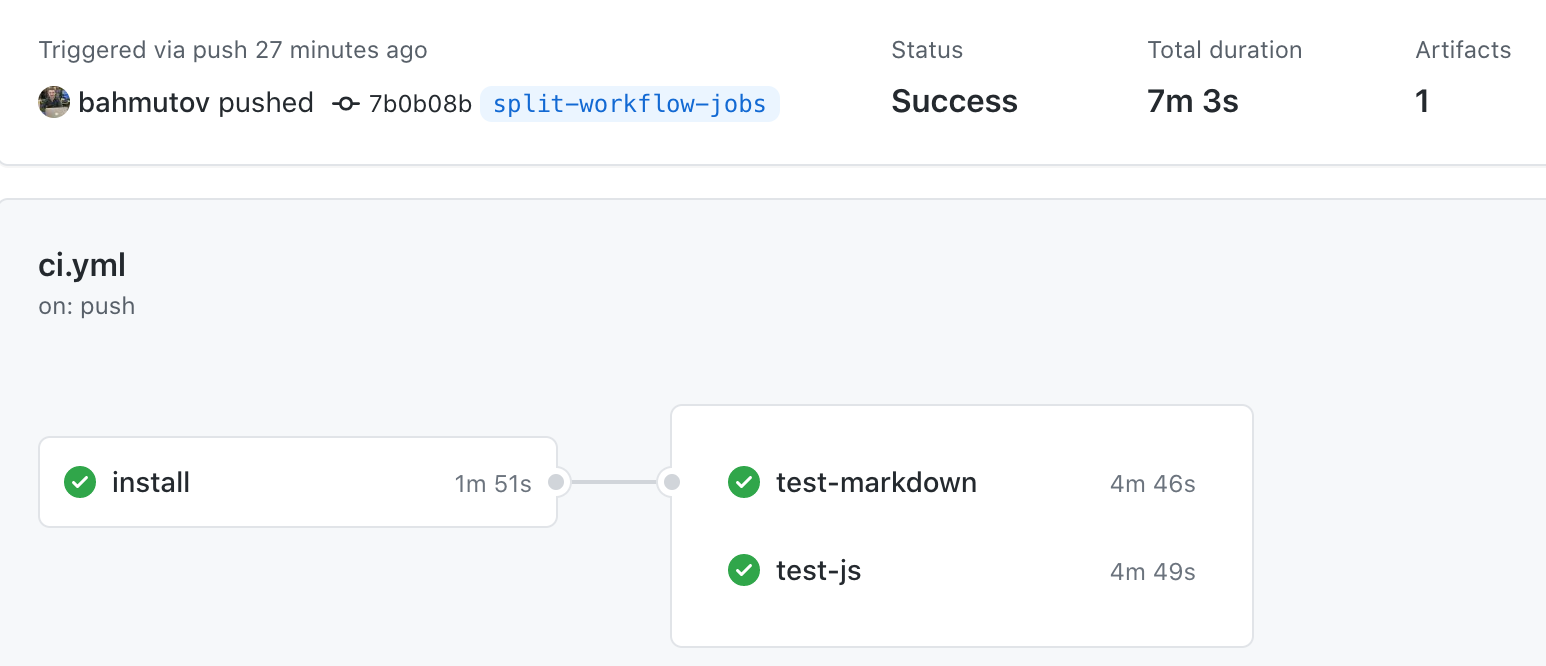

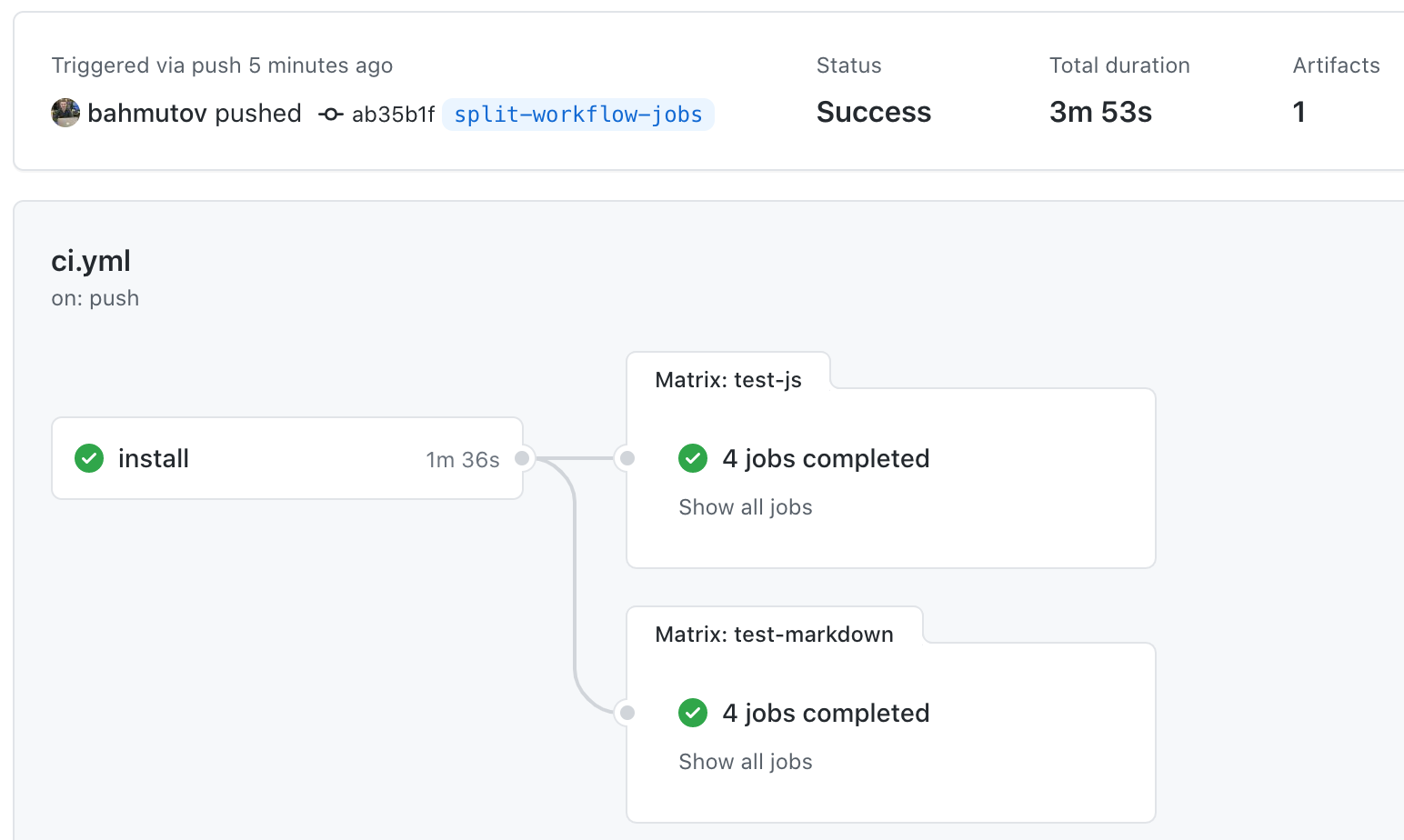

Our current run executes the Markdown and JavaScript specs in parallel, using the common install job.

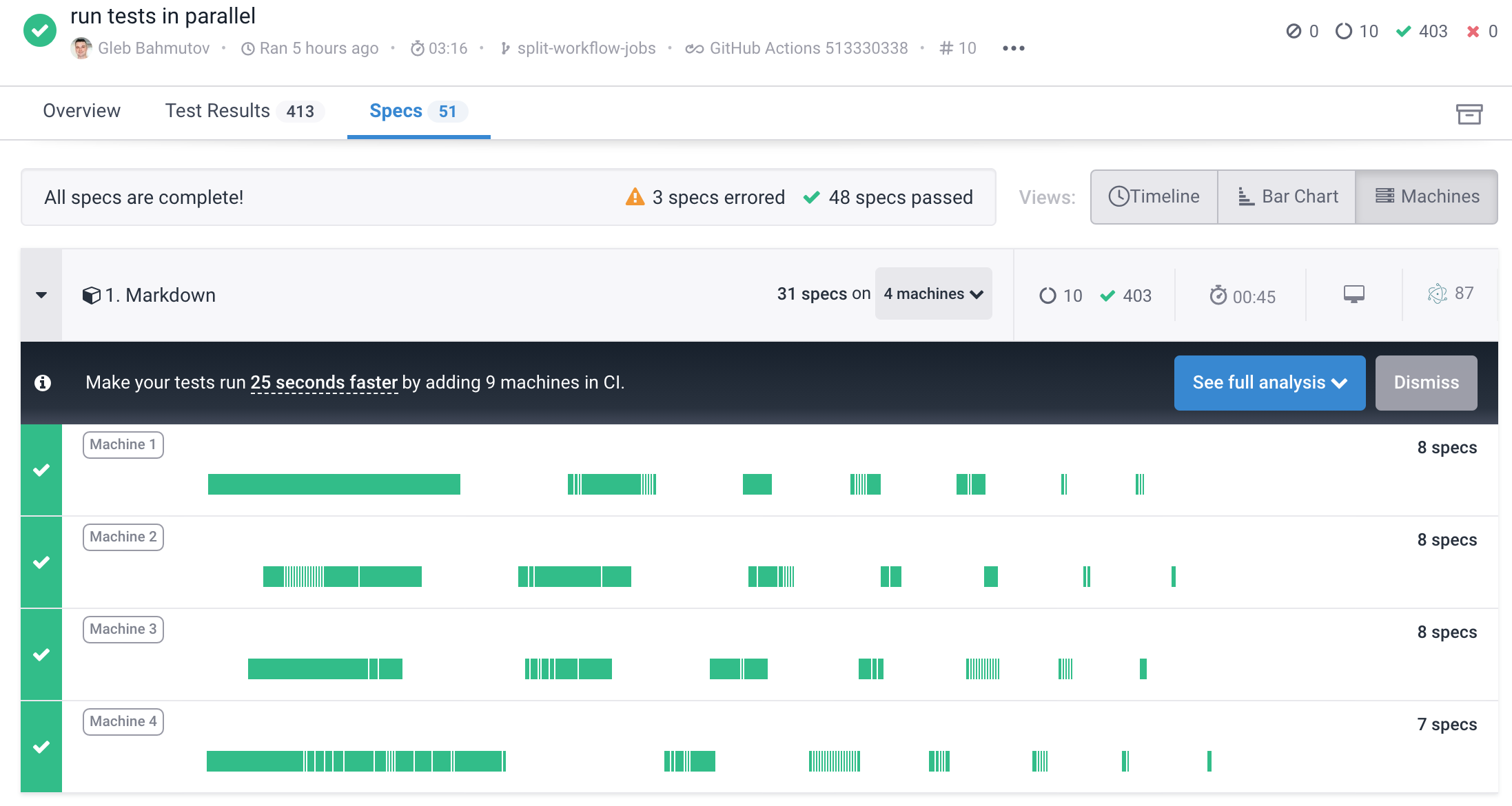

First, let's determine if running tests on several machines is worth the trouble. The Cypress Dashboard (parallelization requires the Dashboard subscription) shows how adding more machines would affect the total test run durations.

Wow, running the tests in parallel would be much faster!

Let's change the Markdown test job to run tests on four machines. We need to add a strategy: matrix section to the job's definition and the parallel: true parameter to the cypress-io/github-action step:

    test-markdown:
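A sketch of the parallel job, modeled on the cypress-io/github-action parallel example. The matrix key `machines`, the group name, the artifact name `built`, and the secret name are assumptions. Parallel runs must be recorded, hence `record: true` and the record key.

```yaml
# Sketch only: names, secret and versions are assumptions.
jobs:
  test-markdown:
    needs: install
    runs-on: ubuntu-latest
    strategy:
      # let the other machines finish even if one of them fails
      fail-fast: false
      matrix:
        # four copies of this job; the values are just labels
        machines: [1, 2, 3, 4]
    steps:
      - uses: actions/checkout@v4
      - uses: actions/download-artifact@v4
        with:
          name: built
      - uses: cypress-io/github-action@v6
        with:
          record: true
          parallel: true
          group: Markdown specs
        env:
          # the Dashboard record key, stored as a repository secret
          CYPRESS_RECORD_KEY: ${{ secrets.CYPRESS_RECORD_KEY }}
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
```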

Tip: you can also check out the cypress-io/github-action parallel example.

We can add the same parameters to the test-js job. The actions UI shows the changed timing:

Our total time went from 7 minutes to under 4 minutes. Not bad! Let's look at the individual testing jobs. There are 4 Markdown testing jobs, and each one takes around 90 seconds.

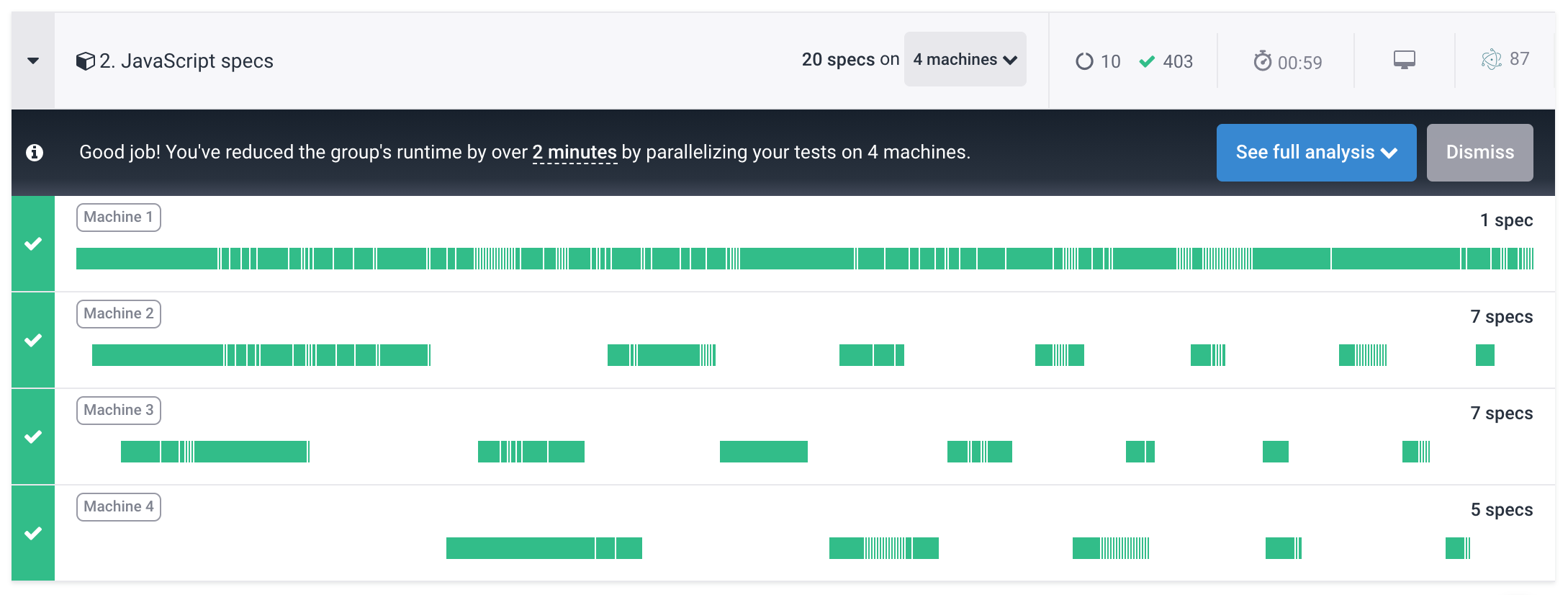

If we look at the Cypress Dashboard view of the specs by machine, we can see that the specs were distributed pretty well - every machine was utilized approximately the same.

Note: you can ignore the warning "3 specs errors". There are specs that do not run directly from the Markdown file and can only run after being converted into "plain" JavaScript specs.

Note 2: the Cypress Dashboard reports the run duration between the start of the first spec and the end of the last spec. The GitHub Actions UI shows the total job duration, which includes the overhead of spinning up the container, installing dependencies, passing the built folders, and finally starting the Cypress process. Thus the 50 seconds on the Dashboard become 1 minute and 30 seconds on GitHub.

Dominant spec

There is no additional benefit in adding more machines to our workflow matrices. The Markdown specs job runs in parallel with the JavaScript specs job, and the JavaScript specs job runs across four machines. We can see from the machine utilization reported by the Cypress Dashboard that the running time is already at its minimum. There is a single concatenated spec file that runs on one of the machines and takes the entire duration. This concatenated spec is equivalent to clicking the "Run All Specs" button in the Test Runner. Because it takes longer than all the other specs split across the other three machines, there is no sense in adding more machines. The additional machines would just finish faster, but the total job duration would still be equal to the concatenated spec's duration.

The final result

The final workflow has the common install job and 3 different test jobs, all passing the built folders and the dependencies using the approach I have described above. The last job in the workflow deploys the static site. It waits for all other jobs and runs only on the default branch.
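A sketch of how such a deploy job can be gated. The job names in `needs`, the branch name, the artifact name, and the `deploy` script are all hypothetical placeholders.

```yaml
# Sketch only: job names, branch name and deploy script are assumptions.
jobs:
  deploy:
    # wait for the install job and every test job
    needs: [install, test-markdown, test-js, test-third]
    # run only on the default branch (assumed to be "master" here)
    if: github.ref == 'refs/heads/master'
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/download-artifact@v4
        with:
          name: built
      # hypothetical script that publishes the static site
      - run: npm run deploy
```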