When you have a long-running Node.js server, it is bound to crash. Invalid input, lost connections, logic errors - there will be exceptions sooner or later. You want to know about every error your server encounters. I always try to use a crash reporting service, like Raygun or Sentry, and have written a bunch of blog posts on how useful these services are.

In this blog post I will show how to properly test crash reporting. Just like testing cars requires crashing a few vehicles into concrete barriers, testing the exception reporting code requires generating crashes on demand. Just like testing cars requires different collision types (head on, side, etc), testing exception reporting requires checking that errors of different types are reported.

My application is a typical Node.js server built with a framework like Express. We will add a GET API end point that generates exceptions, and then we will check whether our crash reporting dashboard displays them.

Let us start with a tiny server with a single end point. When we hit this end point, the server should crash.

`const express = require('express')`

`$ node server.js`

Sync exception

The first crash we want to simulate is synchronously throwing a simple Error. This will stop any further code in the same handler, but it will NOT kill the server!

`app.get('/crash', function (req, res) {`

`$ curl localhost:3000/crash`

Quick security note: by default Express returns the detailed HTML of the crash stack to the caller. This is a security risk for production servers - we don't want to list all the modules used when the crash happens. To quickly prevent the stack trace from leaking out, start the server with the NODE_ENV=production environment setting.

`$ NODE_ENV=production node server.js`

Much better!

In both cases, the server's terminal displays the full error stack

`$ node server.js`

Note that the server keeps on running, and we can execute more requests. A single crash inside the response middleware does not bring us down.

Async exception

In JavaScript we might get an error that is thrown out of band with the current call stack. For example, the following error does NOT interrupt the handler's call stack, since it is thrown from its own little stack, scheduled via the event loop

`app.get('/crash-async', function (req, res) {`

When we run this code we get into a weird situation. The client gets the right response

`$ curl -i localhost:3000/crash-async`

But the entire server crashes AFTER finishing the response.

`$ node demo/server.js`

Because the error is thrown from another execution stack, scheduled AFTER the callback function finishes, we cannot just catch this error using a try/catch block.

`// does not work`
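To make the failure mode concrete, here is a minimal sketch that uses only Node core and no Express, so the names (`seen`, `byTryCatch`, `byProcess`) are illustrative and not taken from the demo server. The `uncaughtException` listener is registered only as a safety net so that the sketch itself keeps running.

```javascript
// Records which mechanism actually saw the error.
const seen = { byTryCatch: false, byProcess: null }

// Safety net so this sketch does not terminate the process.
process.once('uncaughtException', function (err) {
  seen.byProcess = err.message
})

try {
  // setTimeout returns immediately; the callback runs later,
  // on a fresh call stack scheduled by the event loop
  setTimeout(function () {
    throw new Error('Async error')
  }, 0)
} catch (err) {
  // never reached: nothing was thrown while the try block was running
  seen.byTryCatch = true
}
```

By the time the timer fires, the try block has long since finished, so only the process-level listener gets a chance to see the error.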

We will see how to handle these types of crashes properly in a little bit.

Rejected promises

Using promises is a great way to avoid callback hell. Yet they can be silent and deadly if we are not diligent in attaching a .catch handler at the very end. For example, the following code will throw an error that goes nowhere!

`app.get('/crash-promise', function (req, res) {`

If we run this code, the request simply times out. What is going on?

`$ node demo/server.js`

The original promise is rejected in Promise.reject(42) and we get into the second callback where we are supposed to send the error response to the user. But this error callback has a bug of its own - the variable value is not declared anywhere.

`function () {`

The ReferenceError that is generated inside the above function is swallowed silently. Even worse, if we move the code around and have a similar error inside the success callback, it will also be silently swallowed.

`Promise.resolve(42)`

The error callback in this case only handles the rejection from the previous step, not from the parallel success callback.

In general, I advise putting the error callback by itself at the last position, instead of in parallel. Then it will handle any error from any of the previous steps.

`app.get('/crash-promise', function (req, res) {`

Now the user at least gets the error response

`$ curl localhost:3000/crash-promise`
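The difference between the two placements of the error callback can be sketched with plain promises, without any server. The helper `buggySuccess` and the undeclared name `missing` are made up for this illustration.

```javascript
// Hypothetical success callback that has a bug in it.
function buggySuccess (value) {
  // `missing` is not declared anywhere, so this throws a ReferenceError
  return missing + value
}

// Error callback passed in parallel: it only sees rejections of the
// original promise, so the ReferenceError from buggySuccess skips it.
const parallel = Promise.resolve(42)
  .then(buggySuccess, function (err) {
    return 'handled: ' + err.name
  })

// Error callback in the last position: it sees errors from every
// step that comes before it.
const last = Promise.resolve(42)
  .then(buggySuccess)
  .catch(function (err) {
    return 'handled: ' + err.name
  })

// Inspect both results; the extra catch keeps the first chain
// from ending up as an unhandled rejection.
parallel.catch(function (err) {
  console.log('parallel form let through:', err.name)
})
last.then(function (message) {
  console.log('last-position form:', message)
})
```

The `parallel` chain ends up rejected even though it has an error callback, while the `last` chain resolves with the message produced by its handler.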

For our error testing purposes we need both features. We want to reply to the user, but it matters even more that we find out about every silently rejected promise. Thus our crash callback should simply generate a rejected promise without holding up the response.

`app.get('/crash-promise', function (req, res) {`

Generate all crashes at once

To simplify testing any server, I have put the above three crashes into a single module one can include in any Express (or Koa) server as middleware. You can find it at bahmutov/crasher and use it like this

`const express = require('express')`

When called, all three errors will be raised: sync and async errors, and an unhandled rejected promise. See the implementation for details.

Let us see now how to handle these errors without crashing the server, and how to report these errors to an external error monitoring service.

Receiving all errors in an Express app

We need to collect all three types of errors. For sync errors that happen while processing the request, we can just add another middleware. In an Express server we should put this middleware at the very last position, after all the routes

`const express = require('express')`

Now we receive the error when the code crashes synchronously

`$ node demo/server-with-error-handling.js`
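As a rough sketch of such a middleware: Express recognizes an error handler by its four-argument signature `(err, req, res, next)`. The function name `errorHandler`, the log text and the `fakeResponse` helper below are my own choices for illustration; the fake response exists only so the handler can be exercised without starting a server.

```javascript
// Express treats a middleware with exactly four arguments as an error handler.
function errorHandler (err, req, res, next) {
  console.error('caught error on %s: %s', req.url, err.message)
  res.status(500).send('Server error')
}

// In a real server this is registered after all the routes:
//   app.use(errorHandler)

// Tiny fake response object, only to exercise the handler without Express.
function fakeResponse () {
  return {
    statusCode: 200,
    body: null,
    status: function (code) { this.statusCode = code; return this },
    send: function (body) { this.body = body; return this }
  }
}

const res = fakeResponse()
errorHandler(new Error('Sync error'), { url: '/crash' }, res, function () {})
```

This handler is also the natural place to forward the error to a crash reporting client before replying to the user.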

For async errors, we need to catch all out-of-band errors. Node has this capability; we just need to register a listener. This is not specific to the Express application, it applies to every Node process, see the Process module docs.

`// place somewhere in the beginning`

Now we are notified about all global asynchronous errors and our server no longer crashes!

`Example app listening on port 3000!`

Finally, to know about rejected promises, Node (v5 at the time of writing) has another global event we can use, called "unhandledRejection". We can handle it the same way as async errors.

`process.on('unhandledRejection', function (reason, promise) {`

If the reason for promise rejection is an instance of Error, then we print the message, otherwise just print the reason value itself.

`Example app listening on port 3000!`
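The reason check described above can be sketched as a small helper. The names `describeReason` and `reported` are hypothetical, and the two rejected promises at the end are there only to trigger the listener.

```javascript
// Turns any rejection reason into a printable message.
function describeReason (reason) {
  return reason instanceof Error ? reason.message : String(reason)
}

// Kept in memory here; a real server would log or forward these.
const reported = []

process.on('unhandledRejection', function (reason, promise) {
  reported.push(describeReason(reason))
  console.error('unhandled rejection:', describeReason(reason))
})

// Two rejections with no .catch attached anywhere
Promise.reject(42)
Promise.reject(new Error('Promise error'))
```

Rejecting with a plain value such as 42 is legal, which is why the listener cannot assume that `reason` has a `message` or a `stack`.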

Great, all types of errors are handled without crashing the server.

Receiving all errors in a Koa app

Koa is a great step up from Express.

First, let us write an example Koa server that throws each type of error

`const koa = require('koa')`

We can run and crash this Koa server just like we could crash an Express one, except Koa does not send the stack on synchronous exception by default.

Let us handle the exceptions in Koa. Not only can we catch errors from downstream middleware in the first middleware callback, Koa also emits the errors, so we can log them in a single location. See the example code on the Koa wiki.

At the bare minimum:

`app.on('error', function (err) {`

`$ node demo/server-koa-with-error-handling.js`

If you want more details, we also have access to the request context object

`app.on('error', function (err, ctx) {`

`# curl localhost:3000/crash-sync`

If we handle an error in our middleware and still want to log / send it to a crash reporting service, we should emit it.

`function * syncError() {`

`$ node demo/server-koa-with-error-handling.js`
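The handle-then-emit pattern can be sketched without Koa, because the Koa application object is an event emitter. In this sketch a plain `EventEmitter` stands in for the Koa app and `ctx` is a bare object rather than a real Koa context; `handleRequest` and `collected` are hypothetical names.

```javascript
const EventEmitter = require('events')

// Stand-in for the Koa application object.
const app = new EventEmitter()
const collected = []

// Single place where every error ends up, as with app.on('error') in Koa.
app.on('error', function (err, ctx) {
  collected.push({ message: err.message, url: ctx.url })
})

// Hypothetical handler: recovers from the error, answers the user,
// and still emits the error so it can be logged or reported.
function handleRequest (ctx) {
  try {
    throw new Error('Sync error')
  } catch (err) {
    ctx.status = 500
    ctx.body = 'Server error'
    ctx.app.emit('error', err, ctx)
  }
}

const ctx = { app: app, url: '/crash-sync', status: 200, body: null }
handleRequest(ctx)
```

The user gets a controlled 500 response, and the listener still receives the error together with the request it came from.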

We have the best of both worlds - we can handle the errors gracefully and respond to the user, yet collect every such error.

Sending collected errors to the crash service

Once you set up or buy an account with a crash reporting service, you should forward all collected errors there. For example, here is how to forward errors to Sentry using its Raven client. If the Sentry URL is not configured, the code just prints the error to the terminal.

`const raven = require('raven')`
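The "forward if configured, otherwise print" logic can be sketched independently of any particular client. Everything here is an assumption made for illustration: `createReporter`, its `url` and `send` parameters, and the `SENTRY_URL` variable name are hypothetical, and `send` stands in for whatever call the real crash reporting client exposes.

```javascript
// Builds a report function. `send` is a placeholder for the real client call.
function createReporter (url, send) {
  if (!url) {
    // no crash service configured: fall back to the terminal
    return function (reason) {
      console.error('error (not forwarded):', reason)
      return 'console'
    }
  }
  return function (reason) {
    // rejection reasons are not always Error instances, so normalize them
    const err = reason instanceof Error ? reason : new Error(String(reason))
    send(url, err)
    return 'forwarded'
  }
}

// SENTRY_URL is an assumed environment variable name.
const report = createReporter(process.env.SENTRY_URL, function (url, err) {
  // a real client call would go here
})

// The same function can then serve every error source, for example:
//   process.on('uncaughtException', report)
//   process.on('unhandledRejection', report)
```

Keeping the fallback inside one function means the rest of the server never has to know whether a crash service is configured.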

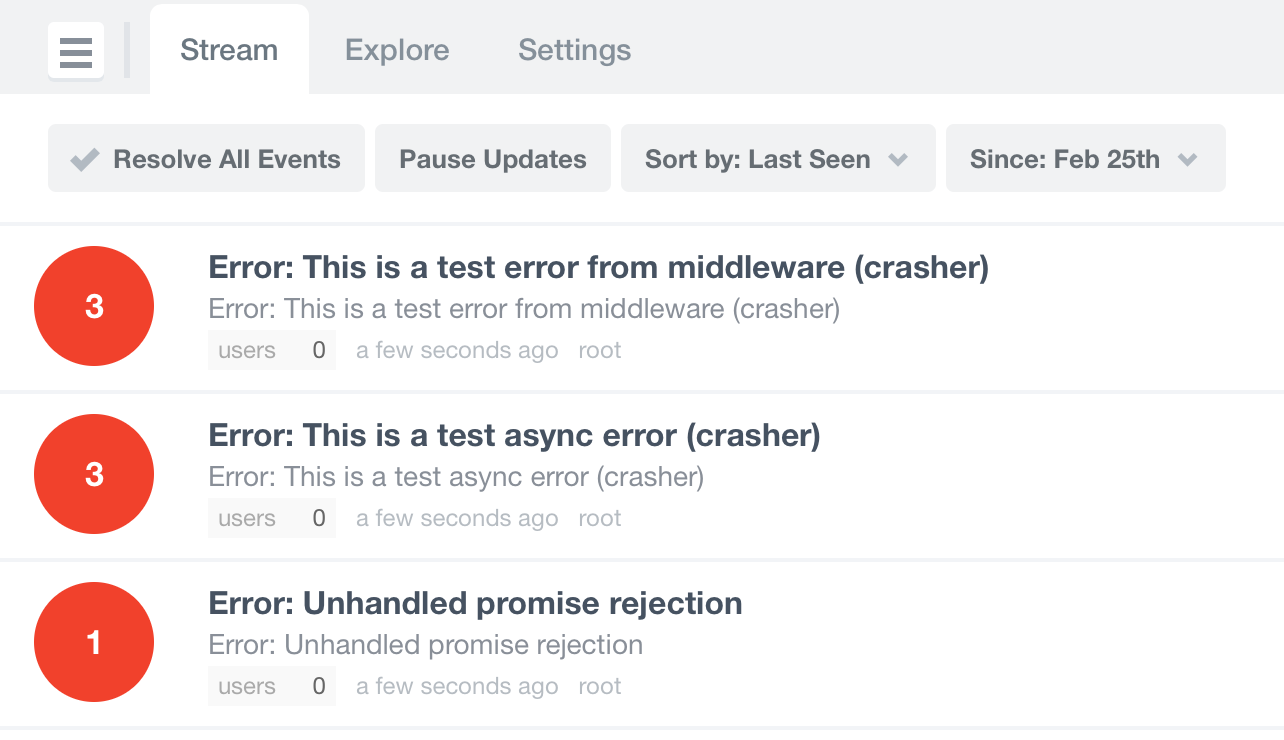

If you use crasher and everything is set up and deployed correctly, when you execute curl <domain>:/crash you should see the errors on Sentry a couple of seconds later.

Other crash reporting services can be used in similar fashion.

Conclusions

- Server errors come in different flavors.

- You should set up a crash end point to test how your server actually handles the different types of errors.

Useful links

- crasher - middleware for Express / Koa servers that generates the above crash scenarios.

- raven-express - quickly forwards the Express crashes to the Sentry server.

- crash-reporter-middleware - figures out which crash reporting service is configured by inspecting variables and configures the appropriate reporter client.

- Raven client for sending errors to Sentry

- Raygun client for sending errors to Raygun.io

- crash-store - run your own crash receiver for Raygun client to send exceptions to.